#@title This video goes with this notebook:

from IPython.display import YouTubeVideo

YouTubeVideo('Wl1NOUHZL08') # https://youtu.be/Wl1NOUHZL08

Note: GitHub.com does not render everything when viewing these notebook files, so click the “Open in Colab” button above to see everything as it’s intended.

Let’s Make an (Ethically Problematic) Image-Analyzer!

by Scott H. Hawley, August 29, 2021, updated Sept 12, 2023

This coding lesson was inspired by two papers:

- “Integrating Ethics into Introductory Programming Classes” by Casey Fiesler et al, SIGCSE ’21: Proceedings of the 52nd ACM Technical Symposium on Computer Science Education, March 2021.

- “Mitigating dataset harms requires stewardship: Lessons from 1000 papers” by Kenny Peng, Arunesh Mathur, Arvind Narayanan, arXiv:2108.02922, cs.LG, August 2021.

Computer vision problems often serve as onramps for learning about deep learning (DL), maybe because it’s an area where DL’s successes have been so demonstrable, and/or because many of us are “visual learners.” So, there are a few types of apps that we can try to make with images, and I wrote the title as “analyzer” as a deliberately vague ploy to skirt the boundary between two main applications:

- classification: what kind of thing is this a picture of?

- facial recognition: which particular person is this a picture of?

Classification is usually covered first, so we’ll do that.

# First, let's install a few things

%pip install -Uqq fastai duckduckgo_search git+https://github.com/drscotthawley/mrspuff.git

%pip install -q bokeh==2.4.3 # downgrade bokeh for now

Preparing metadata (setup.py) ... done

Building wheel for mrspuff (setup.py) ... done

ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.

panel 1.2.2 requires bokeh<3.3.0,>=3.1.1, but you have bokeh 2.4.3 which is incompatible.

# and import what we'll eventually need

from fastai.vision.all import *

from fastcore.basics import *

from fastai.vision.widgets import *

import mrspuff

import mrspuff.quiz as quiz

from IPython.display import HTML, display

from duckduckgo_search import ddg_images

# And just as a check: what kind of GPU do we have? (Probably a Tesla T4.)

!nvidia-smi

Wed Sep 13 12:24:32 2023
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 525.105.17   Driver Version: 525.105.17   CUDA Version: 12.0     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  Tesla T4            Off  | 00000000:00:04.0 Off |                    0 |
| N/A   38C    P8     9W /  70W |      0MiB / 15360MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+

What shall we build?

What kind of “app” do we want to make? It’s common to do something cute like distinguishing between kinds of animals (dogs and cats, pet breeds, types of bears,…) but since this course is on “Deep Learning and AI Ethics” we’re going to follow Casey Fiesler et al and choose something ethically challenging, which means we use images of people!

How about a system that makes all kinds of inferences about you based on your face? That would be ethically challenged, right? And such systems have been used to predict criminality, sexual orientation,… the only limit is your imagination:

- How about an app that predicts your child’s future earnings based on a photograph, or maybe some other data? Oh wait. That doesn’t work.

- How about an app that decides how hireable you are based on a photo? Been there, done that. But that gives me an idea…

App Idea: Are You Being a Good Student?

(H/T: The initial idea for this app was suggested by algorithmic bias specialist Vienna Thompkins.)

When I visited the AI in Education (AIED) conference a few years ago, the most advanced systems I observed were (consistently) from various researchers in China who were using deep learning to track student behavior, i.e. to perform automated surveillance for education, whether via analysis of video cameras pointed at the class, or videos from – and this is relevant for us – online education!

As an indicator of the interest in this field, here’s a smattering of some articles on automated surveillance applications in education:

- “Engagement detection in online learning: a review”

- “Monitoring Students’ Attention in a Classroom Through Computer Vision”

- “Developing a Machine Learning Algorithm to Assess Attention Levels in ADHD Students in a Virtual Learning Setting using Audio and Video Processing”

- “A Computer-Vision Based Application for Student Behavior Monitoring in Classroom”

- “Emotion Recognition of Students Based on Facial Expressions in Online Education Based on the Perspective of Computer Simulation”

- “Student Engagement Detection Using Emotion Analysis, Eye Tracking and Head Movement with Machine Learning”

…And up until summer 2021, there were multiple Chinese companies offering individualized (spoken) English language tutoring to students in China by connecting them with American instructors via video conferencing. (Like Zoom, but not Zoom.)

Recordings and/or live-streams of these sessions would be routinely scanned by human monitors in China as well as by automated systems to check for violations of Chinese policy (e.g. showing a map where Taiwan was not included as part of China) and for any evidence of students or teachers not exhibiting exemplary behavior.

One thing American instructors found to be a bit odd was that students typically announced very clearly at the start of each session, “I am happy!” Because happy is good. Happy people are good citizens. Happy students are good students. (Source: Personal comments from an instructor I know who’d like to keep his/her job)

#@title Question to check yourself: (press the "Play" button to the left first)

# this is totally sarcastic and hopefully everyone will understand that, and not be too confused ;-)

quiz.mc_widget('Are good students always happy?',['Yes','No'],'Yes')

…Good. ;-) Now you get the idea of the sort of app we’re going to make! ;-)

This is from the same country that brought you an ML app that predicts whether you’re likely to be a criminal based on a photo – ok wait, that was from China in 2016, but here’s the same idea from the USA in 2020!

We could perhaps pattern our system after this one:

- “Design of Intelligent classroom facial recognition based on Deep Learning” by Jielong Tang, Xiaotian Zhou, and Jiawei Zheng, Journal of Physics Conference Series 1168(2):022043, Feb 2019.

…Except that they used the FER-2013 dataset, which,…well, here’s a representative sample:

Based on that sample, can you guess the correct responses to the following True/False statements?

#@title Check yourself as you go:

display(quiz.mc_widget('The FER-2013 dataset exhibited a great deal of racial diversity.',['True','False'],'False'))

display(quiz.mc_widget('FER-2013 contained lots of pictures of actual students in either classrooms or online learning environments.',['True','False'],'False'))

Furthermore, one might ask, is it even reasonable to expect AI (or humans for that matter) to detect emotions just from photographs?

No, it’s not: “YOU CAN’T DETERMINE EMOTION FROM SOMEONE’S FACIAL MOVEMENTS–AND NEITHER CAN AI” (Northeastern U., Aug. 20, 2021).

…But that won’t deter people from making apps that purport to do it, possibly violating laws in the process, so we’re going to do it! …And we’ll inspect how such a (problematic) system might operate – or even how it might completely fail to even deliver on its problematic promises. (Heck, as long as we can sell it to somebody, who cares, right? ;-) <– The winky face means I’m being sarcastic, embodying an ethically-uninformed position one might find in various sectors of industry or even academia.)

So, what should we use for a training dataset?

How/Where to Get Training Data?

The nice thing about FER-2013 is that it already exists. Many an ML project follows the path of, after the initial idea, looking around for “What existing datasets can we grab?” even if those existing datasets have underlying ethical issues. In other words, we could just use somebody else’s dataset that we find “out there” and…just not worry about or ask how it was created – because that’s like asking the AI version of “how the sausage gets made”, yuck! ;-)

How was FER-2013 created? From the original paper:

FER-2013 was created by Pierre Luc Carrier and Aaron Courville. It is part of a larger ongoing project. The dataset was created using the Google image search API to search for images of faces that match a set of 184 emotion-related keywords like “blissful”, “enraged,” etc. These keywords were combined with words related to gender, age or ethnicity,…

Ok, so, they scraped the internet. We can scrape the internet too (spoiler: That is in fact what we’ll do), but let’s talk about this some more. Scraping the public internet is not too problematic. Scraping sites where people log in and post personal information, e.g. scraping dating sites, is a lot more problematic.

Instead of scraping, we could do what researchers at Duke University did, setting up surveillance cameras all over campus and not telling anybody – oh wait, they had to retract all that and apologize…

…even though most universities have a clause that all students sign saying the university can use your photo for anything, anytime…

…let’s just not. Here are a few more questions we might want to think about for the ethical construction of our dataset:

- If we’re academics, then we might want to share a version of our dataset so other people can verify our results. This brings up questions:

- Do we have the license to re-distribute the images? (The maintainers of CelebA confess they do not.)

- If we modify some existing dataset, do we have a license to redistribute a modified form of that dataset?

- If we’re at a company, we might not want to release our training dataset. In that case, where we get our data from doesn’t matter, right? Even if the training dataset we grab is designated as being for non-commercial use only, who would ever know, right? Hold up:

- Turns out that it’s possible to extract training data from a trained model.

…Darn, all this ethics stuff can be INCONVENIENT – it’s stifling innovation!

For us, we’re going to exercise the usual “education loophole”. What we’re going to do today is for non-commercial, education & research use. We’re going to scrape some images from the public internet, we’re not going to redistribute them….and the result is going to be a very poorly-performing model!!!

Datasets are a big deal, it turns out

Often machine learning pedagogy starts with “we’re just going to grab XXXX dataset in order to illustrate this model/concept.” And it’s innocuous as flowers. But in real life, you have to make your own dataset, and you have to “clean” your own dataset, and you have to make sure you aren’t violating ethical principles or licensing concerns. Getting training data is the less-talked-about “underside” of machine learning and especially deep learning. But it’s starting to receive more attention.

And by the way, we haven’t even talked about how problematic the labels can be for supervised classification projects, even for some of the most carefully curated datasets.
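One small, partial check on labels can at least be automated: looking for items that ended up filed under more than one class. The sketch below is only an illustration under stated assumptions. The function name `find_label_conflicts` and the file names are made up for this example, and a check like this catches only exact duplicates, not labels that are simply wrong.

```python
from collections import defaultdict

def find_label_conflicts(items_by_label):
    """Return the items that were filed under more than one label."""
    labels_for_item = defaultdict(set)
    for label, items in items_by_label.items():
        for item in items:
            labels_for_item[item].add(label)
    # Keep only the items that carry two or more different labels
    return {item: sorted(found)
            for item, found in labels_for_item.items()
            if len(found) > 1}

# Hypothetical, made-up identifiers purely for illustration
example = {
    'good':  ['img_a.jpg', 'img_b.jpg', 'img_c.jpg'],
    'bad':   ['img_c.jpg', 'img_d.jpg'],
    'bored': ['img_d.jpg', 'img_e.jpg'],
}

conflicts = find_label_conflicts(example)
for item, found in conflicts.items():
    print(f"{item} appears under: {', '.join(found)}")
```

Any item this reports is being presented to the model as belonging to two classes at once, so it would need to be relabeled or dropped before training.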

…I think you’re starting to get the idea that even the act of creating some kind of facial-recognition app is fraught from the get-go, just over the issue of the dataset itself. It’s possible that these ethical issues may cause you to even abandon the field of computer vision research.

But hey, some ethically-challenged person’s going to do it, and you don’t want to give the Chinese an edge in AI development, do you?

2023: Scraping Data from the Internet is No Longer a Given

It used to be that “scraping the internet” for data – usually text and/or images – was a common practice, accepted by ML researchers and companies.

Today, if you do a web search on “ethics of data scraping 2023” you will see numerous articles discussing changes in public attitudes about this, and legal cases involving data scraping.

Usually, scraping for research or educational purposes was (and still is) largely uncontested.

The problems usually arise with regard to using the data to build commercial products.

This is such an important, changing topic that we will devote further attention to it later in the course. For now, we’re going to move on to just doing it anyway (we said this would be ethically problematic, right?), and revisit the topic of the ethics and legality of using scraped data at a later date.

Make Your Own Dataset

“Anyone working in ML, anyone, should be obliged to curate a dataset before they’re allowed to train a single model. The lessons learnt in the process are invaluable, and the dangers of skipping said lessons are manifold (see what I did there?)”

–Justin Salamon, Senior research scientist at Adobe Research. (Machine learning and signal processing for audio & video.)

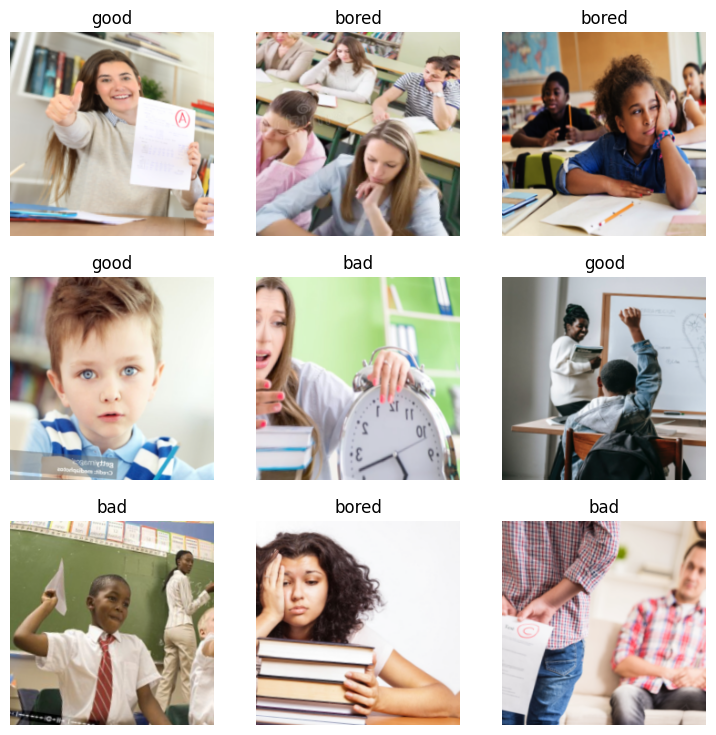

We’re going to scrape DuckDuckGo for images of three categories of students:

- good student

- bad student

- bored student

Note: We will be performing supervised learning by letting the search engine supply the ground-truth labels for our deep learning model.
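To make “letting the search engine supply the labels” concrete, here is a minimal sketch of the idea. It is not the actual `mrspuff` implementation: the helper names `build_queries` and `label_from_path` are hypothetical, and the one-folder-per-label layout under `scraped_images` is an assumption made for illustration.

```python
from pathlib import Path

def build_queries(labels, search_suffix):
    """Pair each class label with the phrase we would type into the search engine."""
    return {label: f"{label} {search_suffix}".strip() for label in labels}

def label_from_path(image_path):
    """Treat the name of the folder an image sits in as its class label."""
    return Path(image_path).parent.name

labels = ('good', 'bad', 'bored')
queries = build_queries(labels, 'student')

for label, query in queries.items():
    # Assumed layout: one sub-folder per label (hypothetical, for illustration)
    print(f"search for {query!r} -> save under scraped_images/{label}/")

# Whatever the search engine returned for a query becomes the "ground truth"
print(label_from_path('scraped_images/bored/0001.jpg'))
```

Nobody inspects the pictures at any point in this pipeline. The search phrase alone decides which label an image receives.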

These will then feed into our social credit system (beyond the scope of this course): bad students will be penalized, and “bored student” will mean the teacher will be penalized! Let’s see how (totally awful) this design plays out:

Now let’s tell it to scrape…

dl_path = 'scraped_images' # the folder where we're saving to

labels = 'good','bad','bored'

search_suffix = 'student'

path = mrspuff.scrape.scrape_for_me(dl_path, labels, search_suffix, max_n=200)

/usr/local/lib/python3.10/dist-packages/duckduckgo_search/compat.py:60: UserWarning: ddg_images is deprecated. Use DDGS().images() generator

warnings.warn("ddg_images is deprecated. Use DDGS().images() generator")

/usr/local/lib/python3.10/dist-packages/duckduckgo_search/compat.py:64: UserWarning: parameter page is deprecated

warnings.warn("parameter page is deprecated")

/usr/local/lib/python3.10/dist-packages/duckduckgo_search/compat.py:66: UserWarning: parameter max_results is deprecated

warnings.warn("parameter max_results is deprecated")

good student: Got 90 image URLs. Downloading images...

urls = ['https://www.hiboox.com/wp-content/uploads/2020/02/student-1.jpg', 'https://lindastade.com/wp-content/uploads/2019/03/shutterstock_102482084-e1552480105382.jpg', 'https://www.schoolsofdehradun.com/wp-content/uploads/2019/08/QUALITIES-OF-A-GOOD-STUDENT.jpg', 'https://www.pitmanroaringtimes.com/wp-content/uploads/2018/11/100602603.jpg', 'https://i1.wp.com/rollercoasteryears.com/wp-content/uploads/Thrive-During-Finals-.jpg?fit=1000,667&ssl=1', 'https://yourteenmag.com/wp-content/uploads/2013/06/Depositphotos_24196475_l-2015.jpg', 'https://stemeducationguide.com/wp-content/uploads/2021/05/What-Makes-A-Good-Student.jpg', 'https://images.saymedia-content.com/.image/t_share/MTc2MjY5ODExMDQ1NjM5MzQx/to-be-an-ideal-student.jpg', 'https://www.ucg.org/files/styles/full_grid9/public/image/article/2011/07/15/five-qualities-of-successful-students.jpg', 'https://img.freepik.com/free-photo/good-student-posing-with-notebook-backpack_23-2147650748.jpg?size=626&ext=jpg', 'https://www.waterford.org/wp-content/uploads/2019/04/iStock-610839440.jpg', 'https://updatedeverything.com/wp-content/uploads/2020/01/Qualities-of-A-Good-Student-1.jpg', 'https://www.themoviedb.org/t/p/w1066_and_h600_bestv2/o5ASl6gOpO9zCHU42vdFOoExBGo.jpg', 'https://img-aws.ehowcdn.com/600x600p/photos.demandstudios.com/getty/article/50/56/dv740020.jpg', 'https://files.brainfall.com/wp-content/uploads/2015/10/are_you_a_good_student_featured.jpg', 'https://cdn10.bigcommerce.com/s-ehq9w/products/4688/images/9596/cm1225_WM_THUMB_Seven_ways_to_be_a_good_student__94274.1493678783.1280.1280.jpg?c=2', 'https://i.pinimg.com/originals/c7/2a/35/c72a35d4f0af956c649948d7f20e4686.jpg', 'https://blogs.staffs.ac.uk/student-blogs/files/2016/08/iStock_28423686_MEDIUM.jpg', 'https://www.lifezette.com/files/2017/07/studying1.jpg', 'https://www.ahschool.com/uploaded/faculty/sarah/high-school-grades.jpg', 'https://www.irs-restoration.com/wp-content/uploads/2018/03/2018-03-IRS-Healthy-Schools.jpg', 
'https://thumbs.dreamstime.com/b/good-student-smiling-schoolgirl-engaged-table-books-isolated-over-white-40585265.jpg', 'https://static-us-east-2-fastly-a.www.philo.com/gracenote/assets/p194462_v_h10_ac.jpg', 'https://thefrisky.com/wp-content/uploads/2018/11/Successful-Students-1.jpg', 'https://i.ytimg.com/vi/ge0VVCmsM2M/maxresdefault.jpg', 'http://brocksacademy.com/wp-content/uploads/2013/09/Studying.jpg', 'https://www.cdacouncil.org/storage/CounciLINK/April_2019/STUDY_STUDENTS_WITH_GOOD_GRADES_ARE_MORE_POPULAR_SECURE.jpg', 'https://www.oxfordlearning.com/wp-content/uploads/2017/04/AdobeStock_132869690-1024x683.jpeg', 'https://i.ytimg.com/vi/G_angLL4FeE/maxresdefault.jpg', 'https://busyteacher.org/uploads/posts/2012-09/1347467411_untitled-1.png', 'https://neeadvantage.com/wp-content/uploads/2021/01/pexels-katerina-holmes-5905557-scaled.jpg', 'https://i.ytimg.com/vi/foKCuCA6Zf4/maxresdefault.jpg', 'https://image.tmdb.org/t/p/w500/uOmBrbZBABF7G3F0QaaJJURJGCw.jpg', 'https://massappleseed.org/wp-content/uploads/2020/02/classroom-Copy.jpg', 'https://quotefancy.com/media/wallpaper/3840x2160/2351588-Marva-Collins-Quote-The-good-teacher-makes-the-poor-student-good.jpg', 'https://quotefancy.com/media/wallpaper/3840x2160/1181304-Marva-Collins-Quote-The-good-teacher-makes-the-poor-student-good.jpg', 'https://media.gettyimages.com/photos/good-student-picture-id143922844', 'https://www.sparkadmissions.com/wp-content/uploads/2020/04/How_to_Get_Good_Grades_in_High_School.jpg', 'https://img.haikudeck.com/mg/eDS1jHpHu6_1401974637587.jpg', 'http://4.bp.blogspot.com/-1ui5wVV-BSk/UaXW--RRtEI/AAAAAAAACaw/3RxA9rPi7aw/s1600/A+GOOD+STUDENT.jpg', 'https://i.pinimg.com/originals/55/a7/31/55a731e081df0ad79b34d5f979d74993.jpg', 'https://leverageedu.com/blog/wp-content/uploads/2021/06/Qualities-of-a-Good-Student-800x500.png', 'https://i.pinimg.com/736x/df/3c/b8/df3cb8181c9429f40a14d4e1148dd3ba.jpg', 'https://i.ytimg.com/vi/vzfQhHN3zrc/maxresdefault.jpg', 
'https://www.thoughtco.com/thmb/MVaVaaIGSRwC5ctwLFBNwFYZiW4=/4901x3267/filters:fill(auto,1)/group-of-school-kids-raising-hands-in-classroom-860597978-5ab59c403418c600365665f3.jpg', 'https://myuniversitymoney.com/wp-content/uploads/2012/02/reportcardsmall-300x200.jpg', 'https://www.frankbuck.org/wp-content/uploads/2012/04/Pretty_Good_Student.jpg', 'https://4.bp.blogspot.com/--AtvVqVQouM/V_4a-mGUyrI/AAAAAAAAAiw/8pJ5_fR3ykYynYvGQvkmYtdXlyFPEi71QCLcB/s1600/a-good-student-is-special.jpg', 'https://schoolandtravel.com/wp-content/uploads/2020/11/Blog-Thumbnail182.jpg', 'https://static.vecteezy.com/system/resources/previews/000/297/443/original/vector-a-set-of-good-students.jpg', 'https://quotefancy.com/media/wallpaper/3840x2160/4749463-Marva-Collins-Quote-The-good-teacher-makes-the-poor-student-good.jpg', 'https://www.thoughtco.com/thmb/unHUlBJ9XtGg7u5rY5gWwK3lhL8=/3000x2000/filters:fill(auto,1)/perfect-student-characteristics-4148286_final-ab0f5265c6574d66bfc368c627956530.png', 'https://www.aplustopper.com/wp-content/uploads/2020/07/10-Lines-about-Ideal-Student.png', 'https://www.continentalpress.com/wp-content/uploads/2019/05/iStock-480507030.jpg', 'https://image.isu.pub/160326150841-19af7cc7aa2fb6087209636eb884ffa3/jpg/page_1.jpg', 'https://www.daniel-wong.com/wp-content/uploads/2016/05/Students.jpg', 'https://goodtimes.ca/wp-content/uploads/2018/01/Mature-students.jpg', 'https://www.homeworkhelpglobal.com/wp-content/uploads/2019/03/studying-student-on-desk.jpg', 'https://www.marshallsterling.com/sites/default/files/styles/d12/public/news-images/good-students.jpg?itok=ppKFhrI5', 'https://rksmartlife.com/wp-content/uploads/2020/03/Qualities-Of-A-Good-Student-1-1.png', 'https://i.ytimg.com/vi/1KofiHFsBQk/maxresdefault.jpg', 'https://www.ashishchahar.com/wp-content/uploads/2021/05/how-to-be-a-good-student.png', 'https://i.ytimg.com/vi/FJw3-lUsBgI/maxresdefault.jpg', 'https://i.pinimg.com/originals/4e/2d/70/4e2d70acff6d2e0be9d0b8c75579ec6f.png', 
'https://christbytheseanb.org/wp-content/uploads/2020/02/sermon_7.jpg', 'https://i.ytimg.com/vi/obEg0dAWkZo/maxresdefault.jpg', 'http://theweeklychallenger.com/wp-content/uploads/2016/02/college-students.jpg', 'https://1.bp.blogspot.com/-mOeBYk5B3XQ/XWNBPi-ml9I/AAAAAAAAALw/12QsjpzkvHAQccApxRnHtnTX2TWs1kxXACLcBGAs/w1200-h630-p-k-no-nu/blog+14.jpg', 'https://papercheap.s3.amazonaws.com/good-student.jpg', 'https://www.responsiveclassroom.org/wp-content/uploads/2015/04/Windom011.jpg', 'http://img.picturequotes.com/2/228/227965/a-good-student-is-one-who-will-teach-you-something-quote-1.jpg', 'https://image.freepik.com/free-photo/good-students-class_23-2147650717.jpg', 'https://image.slidesharecdn.com/goodstudent-110217000515-phpapp02/95/good-student-1-728.jpg?cb=1297901156', 'https://thumbs.dreamstime.com/b/good-student-portrait-smart-ten-years-girl-big-glasses-book-isolated-over-white-35904103.jpg', 'https://www.missbsresources.com/images/Blog/happy_math_student.jpg', 'https://statc.lumoslearning.com/llwp/wp-content/uploads/2014/01/7habits1.jpg?x30257', 'https://showme.missouri.edu/wp-content/uploads/2020/08/08142020-Students-940x529.jpg', 'https://2.bp.blogspot.com/-7AXo9QiKguY/V_QW22mL3zI/AAAAAAAAH1M/tKya5ivTAZ8kuj3j3XKsAA1zOhFnpX1OgCLcB/s1600/10-ways-354x500.png', 'https://i.ytimg.com/vi/7ML13OsAY_w/hqdefault.jpg', 'https://research.tamu.edu/wp-content/uploads/2019/11/iStock-1031377384-1536x1024.jpg', 'https://shop.dkoutlet.com/media/catalog/product/cache/2/image/1800x/040ec09b1e35df139433887a97daa66f/C/D/CD-114249_L.jpg', 'https://rksmartlife.com/wp-content/uploads/2020/09/10-Qualities-of-a-Good-Student-1.jpg', 'https://thumbs.dreamstime.com/b/young-good-student-school-girl-writing-essay-lesson-pretty-girl-trying-to-be-72610101.jpg', 'https://1.bp.blogspot.com/-OVJRx44tC58/TgcsYSQxS6I/AAAAAAAAAD0/zEzp7I8OTd4/s640/thesuccessfulstudent.gif', 'https://mytopschools.com/wp-content/uploads/2019/02/Steps-To-Becoming-A-Good-Student-o3schools.jpg', 
'https://thumbs.dreamstime.com/z/good-student-9799619.jpg', 'http://images.myshared.ru/20/1241459/slide_9.jpg', 'https://www.sochi.edu/images/news/good-student-at-home.jpg', 'https://www.quizony.com/am-i-a-good-student/imageForSharing.jpg', 'https://w7.pngwing.com/pngs/120/419/png-transparent-student-school-star-teacher-good-student-s-text-class-logo.png']

Images downloaded.

bad student: Got 90 image URLs. Downloading images...

urls = ['https://www.washingtonpost.com/wp-apps/imrs.php?src=https://arc-anglerfish-washpost-prod-washpost.s3.amazonaws.com/public/VZ4C3PQ5WEY3VG2ROZ4KUMZ4MA.jpg&w=440', 'https://images.theconversation.com/files/92936/original/image-20150825-15912-vnio5s.jpg?ixlib=rb-1.1.0&q=45&auto=format&w=926&fit=clip', 'https://dm0qx8t0i9gc9.cloudfront.net/thumbnails/video/NdHffr7_eijh7icah/videoblocks-a-bad-student-is-playing-with-smartphone-during-class-under-the-desk-hiding-from-the-teacher-the-teacher-observes-how-the-student-is-note-taking-lecture-plays-on-a-smartphone_shbxa4h1e_thumbnail-1080_01.png', 'https://www.jamesgmartin.center/wp-content/uploads/2018/02/Fotolia_61768987_Subscription_Monthly_M-1200x800.jpg', 'https://www.arnoldpalmerhospital.com/-/media/images/blogs/illuminate/2017/studentbehavingbadly900-gettyimages-472977148.jpg', 'https://s-i.huffpost.com/gen/1334211/images/o-BAD-STUDENT-facebook.jpg', 'https://ichef.bbci.co.uk/news/1024/branded_news/24D5/production/_95292490_classroombadbehaviourthinkstock.jpg', 'https://s-i.huffpost.com/gen/1637050/images/o-DV1644042-facebook.jpg', 'https://s-i.huffpost.com/gen/1117786/images/o-BADCLASSROOMHABITS-facebook.jpg', 'https://www.verywellfamily.com/thmb/Cl7qmcPM5yrcjaPrTalWdy2TLiw=/2121x1414/filters:fill(DBCCE8,1)/GettyImages-157672984-59cff07e685fbe0011ebe775.jpg', 'https://media.istockphoto.com/photos/bad-student-learning-with-difficulties-picture-id622182790', 'http://i2.cdn.turner.com/cnn/2011/TECH/web/01/28/bad.student.writing/t1larg.bad.student.writing.jpg', 'https://stuckismus.de/wordp/wp-content/uploads/2021/03/understanding-inappropriate-behavior-900x600.jpg', 'https://hemispheretravel.com/images/news/bad-student-behavior-enews.jpg', 'https://www.washingtonpost.com/wp-apps/imrs.php?src=https://arc-anglerfish-washpost-prod-washpost.s3.amazonaws.com/public/IN2WXNXXJMI6VBPXLFARRCUYZU.jpg&w=1440', 'https://atlantablackstar.com/wp-content/uploads/2014/10/Untitled4.jpg', 
'https://i.dailymail.co.uk/i/pix/2011/02/28/article-1361289-0B00E071000005DC-550_468x317.jpg', 'https://media.istockphoto.com/vectors/bad-student-vector-id507490311?k=6&m=507490311&s=170667a&w=0&h=4Zurfyo4_K5K2aFMxNRx8Y6Ipq8frTCKCTO5DIecRY0=', 'https://static.seattletimes.com/wp-content/uploads/2015/05/bd0b7c4a-f8cd-11e4-b106-bd39ccd745b8-1560x1094.jpg', 'https://fijisun.com.fj/wp-content/uploads/2017/11/teacher_helping_kids-750x403.jpg', 'https://media.istockphoto.com/vectors/bad-student-character-vector-flat-cartoon-illustration-vector-id586192316?k=6&m=586192316&s=170667a&w=0&h=I5yVFeeH6lTJBZcPC2J-BRtfsuieWgVntbTIqjq8rbM=', 'https://s.yimg.com/cd/resizer/2.0/FIT_TO_WIDTH-w1024/254ae95072c8229452084a6f92a30374cb547ad4.jpg', 'https://www.greatschools.org/gk/wp-content/uploads/2012/03/Bad-classroom-750x325.jpg', 'https://media4.s-nbcnews.com/i/streams/2013/February/130209/1B5931659-g-tmoms-130208-boy-student.jpg', 'http://media.npr.org/assets/img/2011/06/23/badteaching-56f36554c33013905b35f046fadd87cd3f921f56.jpg?s=6', 'https://i.dailymail.co.uk/i/pix/2010/11/18/article-1330868-0B78415F000005DC-347_468x459.jpg', 'https://cdn.vox-cdn.com/thumbor/trLx4pEpSKTdPpc6q0oMCc7zmeQ=/0x0:5760x3238/1200x0/filters:focal(0x0:5760x3238):no_upscale()/cdn.vox-cdn.com/uploads/chorus_asset/file/676314/shutterstock_160640804.0.jpg', 'http://media.philly.com/images/badclassroom.jpg', 'https://images-na.ssl-images-amazon.com/images/I/51FLJ0-jPFL._SY291_BO1,204,203,200_QL40_.jpg', 'https://static01.nyt.com/images/2016/06/20/opinion/20mon2web/20mon2web-videoSixteenByNineJumbo1600.jpg', 'https://media.istockphoto.com/photos/teacher-checking-sleeping-student-for-a-pulse-picture-id123466509?k=6&m=123466509&s=612x612&w=0&h=tbhM1ng_RmPFUk1X3U3o56WwEvJ1A8bhK1Fy7wjwWKI=', 'https://clueylearning.com.au/wp-content/uploads/2019/06/student_getting_bad_result.jpg', 'https://cdn2.vectorstock.com/i/1000x1000/62/66/sad-student-looking-at-test-paper-with-bad-grade-vector-20426266.jpg', 
'https://static01.nyt.com/images/2014/04/24/arts/24BADTEACHER1/24BADTEACHER1-videoSixteenByNine1050.jpg', 'https://nursinghomeworks.com/wp-content/uploads/2020/10/time-management-thenurselink-1024x684-1.jpg', 'https://media.istockphoto.com/vectors/sad-student-looking-at-test-paper-with-bad-grade-vector-id944750686?k=6&m=944750686&s=612x612&w=0&h=_j_NJtqw9w0BkSWTYLCuBFZo-mAQ_14JVr32rpMxERM=', 'https://straightfromthea.com/wp-content/uploads/2014/05/bad-teacher-straightfromthea.gif', 'https://assets-homepages-learning.3plearning.net/wp-content/uploads/2019/09/blog-motivate-students-without-feeling-like-bad-guy.png', 'https://www.longwood.edu/media/lancer-card/public-site/bad-vs-good-photos-640x384.png', 'https://cdn3.vectorstock.com/i/1000x1000/58/37/sad-student-looking-at-test-paper-with-bad-mark-vector-13755837.jpg', 'https://media.istockphoto.com/vectors/guilty-student-boy-and-teacher-vector-id636071146?k=6&m=636071146&s=612x612&w=0&h=WWgo3D80Cwi3BzjUwjKQg_2XVFRcHl_iHLogmZhMPac=', 'https://i.gr-assets.com/images/S/compressed.photo.goodreads.com/books/1403613054i/8432644._UY630_SR1200,630_.jpg', 'https://media.istockphoto.com/vectors/school-bad-behaviour-vector-id894983104?k=6&m=894983104&s=170667a&w=0&h=-HVC3WR7VfiJVlorYUTvlDUum1R3zCknWSe6rzyf4fA=', 'http://m.wsj.net/video/20130115/011513lunchlab/011513lunchlab_1280x720.jpg', 'https://www.teachertoolkit.co.uk/wp-content/uploads/2019/01/shutterstock_1182248935.jpg', 'https://i2.wp.com/www.additudemag.com/wp-content/uploads/2016/11/School.Behavior.Improve_listening_skills_in_ADHD_kids.Article.5934A.girls_passing_notes.ts_77137314-3.jpg?resize=1280,720px&ssl=1', 'http://news.stanford.edu/news/2015/april/images/15079-discipline_news.jpg', 'https://stanfield.com/wp-content/uploads/2018/04/teacher-reprimanding-student.jpg', 'https://i.ytimg.com/vi/HbjW9vAf1H8/maxresdefault.jpg', 'http://sites.psu.edu/siowfa14/wp-content/uploads/sites/13467/2014/10/shutterstock_151171667.jpg', 
'https://nypost.com/wp-content/uploads/sites/2/2020/10/student-5.jpg?quality=90&strip=all&w=1200', 'https://assets.vogue.com/photos/5891f426153ededd21da505f/master/pass/img-bad-teacher_112037787749.jpg', 'http://ichef.bbci.co.uk/news/976/cpsprodpb/EA2A/production/_84864995_hi028542031.jpg', 'https://images2.storyjumper.com/transcoder.jpg?v=0&coverid=e3c4d4dd9c49f2d842b52c6aca67e0f7&coverpage=front&width=510', 'https://www.hrw.org/sites/default/files/media_2020/09/202009asia_thailand_students.jpg', 'https://cdn.patchcdn.com/users/3500945/stock/T800x600/20150154b6a7061a5d7.jpg', 'https://media.gettyimages.com/photos/junior-school-bad-behaviour-picture-id157529104', 'https://www.telegraph.co.uk/content/dam/education/2017/01/10/54277671studying.jpg?imwidth=680', 'https://mtv.mtvnimages.com/uri/mgid:ao:image:mtv.com:21819?quality=0.8&format=jpg&width=1440&height=810&.jpg', 'http://rlv.zcache.com/there_are_no_bad_teachers_only_bad_students_card-raceeb6fd10d046578179c6da998e1ea8_xvuat_8byvr_512.jpg', 'https://i.ebayimg.com/images/g/ZecAAOSwzaJfD1m~/s-l640.jpg', 'https://www.studypug.com/blog/wp-content/uploads/2016/10/Bad-Grades-in-College.jpg', 'https://www.motherjones.com/wp-content/uploads/BEHAVIOR_C_630.jpg', 'https://cmkt-image-prd.global.ssl.fastly.net/0.1.0/ps/5848861/3005/2001/m1/fpnw/wm0/pupils-in-classroom-1-.jpg?1549620774&s=15e1eb683ea6dbd99d397fdc0f96009d', 'https://www.brookings.edu/wp-content/uploads/2016/06/teacher_denver001.jpg', 'https://media.istockphoto.com/vectors/sad-student-showing-paper-with-f-grade-vector-id501914378?k=6&m=501914378&s=612x612&w=0&h=62GM1MEt4poJfITwa3LlkKkHeWMv3woNu2X8nqezwHw=', 'https://www.metro.us/wp-content/uploads/Reuters_Direct_Media/USOnlineReportWorldNews/tagreuters.com2020binary_LYNXMPEG911AS-BASEIMAGE.jpg', 'https://images.ctfassets.net/iyiurthvosft/featured-img-of-post-175312/bf0a0c2accf05549f4a563358a9472c1/featured-img-of-post-175312.jpg?w=1800&q=50&fm=jpg&fl=progressive', 
'https://i.dailymail.co.uk/i/pix/2014/07/10/article-2686853-1F86AF0300000578-59_306x423.jpg', 'https://media.istockphoto.com/vectors/school-problems-sad-child-vector-id477168506?k=6&m=477168506&s=612x612&w=0&h=rk7N-6YKwa_0nqRA86j_4yjJt54ErO0d26z7-E4Pa3k=', 'http://s1.r29static.com/bin/entry/1a1/580x370/1657054/image.png', 'https://tiffanyyong.com/wp-content/uploads/2017/09/bad-genius_poster.jpg', 'https://images.csmonitor.com/csm/2016/02/964927_1_Report+Card_standard.jpg?alias=standard_900x600', 'https://www.nerdwallet.com/assets/blog/wp-content/uploads/2018/11/how-to-get-a-student-loan-with-bad-credit-600x225.jpg', 'https://www.thephuketnews.com/photo/listing/2017/1504090818_1-org.jpg', 'https://media.istockphoto.com/vectors/cartoon-illustration-of-children-fighting-after-school-vector-id186549608', 'https://as1.ftcdn.net/jpg/01/66/76/42/500_F_166764287_gwB9S351sSEn80xIXPUoGrBvTzEXYLd4.jpg', 'https://cdn.newswire.com/files/x/6b/6d/bf3962a5dcedd4583ff13406cd29.jpg', 'https://static.seattletimes.com/wp-content/uploads/2015/05/d59e2f46-f8cd-11e4-b106-bd39ccd745b8-780x520.jpg', 'https://as1.ftcdn.net/jpg/02/86/43/42/500_F_286434255_LFrZ5YBYoltAWdhSTqCygcjSBW1hDDnO.jpg', 'https://gradepowerlearning.com/wp-content/uploads/2017/06/AdobeStock_84965857.jpeg', 'https://blog.schoolspecialty.com/wp-content/uploads/2017/08/Real-Teacher-Tips-for-Anxiety-Free-Classroom-A.jpg', 'https://i.ytimg.com/vi/EdTrQRr2yjw/maxresdefault.jpg', 'https://www.assignmentexpert.com/blog/wp-content/uploads/2021/04/bs.jpg', 'https://thumbs.dreamstime.com/b/bad-student-portrait-books-hand-38491772.jpg', 'https://i.ytimg.com/vi/32D81osekjU/maxresdefault.jpg', 'https://strengthsasia.com/wp-content/uploads/2020/02/no-bad-students-article-scaled.jpg', 'https://is1-ssl.mzstatic.com/image/thumb/Purple114/v4/27/e2/01/27e20153-e285-654e-bb68-6baa70667288/source/512x512bb.jpg', 'https://i.ytimg.com/vi/1KofiHFsBQk/maxresdefault.jpg', 
'https://thejournal.com/-/media/EDU/THEJournal/Images/2017/07/20170705hero.jpg']

Images downloaded.

bored student: Got 90 image URLs. Downloading images...

urls = ['https://www.gettingsmart.com/wp-content/uploads/2016/07/Bored-Student-Feature-Image.jpg', 'https://d2rd7etdn93tqb.cloudfront.net/wp-content/uploads/2017/09/bored-student-091417.jpg', 'https://thumbs.dreamstime.com/b/bored-student-desk-classroom-17048939.jpg', 'https://www.oxfordlearning.com/wp-content/uploads/2019/11/child-bored-in-school.jpeg', 'https://www.thoughtco.com/thmb/3M7Z_Ni4FBon3rzHiAmxftCbPKo=/2129x1410/filters:no_upscale():max_bytes(150000):strip_icc()/Bored-570285cb3df78c7d9e6d8fe1.jpg', 'https://thecounselingteacher.com/wp-content/uploads/2018/02/bored-students-1-scaled.jpg', 'https://image.freepik.com/free-photo/bored-student-listening-while-classmate-sleeping-university_107420-1839.jpg', 'https://hpbacademy.org/wp-content/uploads/2017/12/bored_student_app_2.jpg', 'https://thumbs.dreamstime.com/b/bored-student-young-man-books-library-people-knowledge-education-literature-school-concept-dreaming-58170287.jpg', 'https://cdn2.vox-cdn.com/thumbor/V36tgSrPH6AgKu6hAgoOODV0qvU=/cdn0.vox-cdn.com/uploads/chorus_asset/file/676314/shutterstock_160640804.0.jpg', 'https://thumbs.dreamstime.com/b/bored-student-tired-yawning-school-desk-classroom-47201192.jpg', 'https://image.freepik.com/free-photo/bored-students-sitting-lecture-hall_13339-65231.jpg', 'https://images.freeimages.com/images/large-previews/63b/class-full-o-bored-students-1465936.jpg', 'https://thethrivegroup.co/wp-content/uploads/2014/07/bigstock-tired-and-bored-student-22669502.jpg?w=300', 'https://img.freepik.com/free-photo/young-blonde-student-looking-bored-writing_23-2148511048.jpg?size=626&ext=jpg', 'https://i.ytimg.com/vi/rn_M_J1i4hQ/maxresdefault.jpg', 'https://tonyenglish.vn/uploads/news/2019/0106/1546786902_bored-student2.jpg', 'https://thumbs.dreamstime.com/b/bored-student-17626957.jpg', 'https://collegecures.com/wp-content/uploads/2010/12/bored-student.jpeg', 'https://www.continentalpress.com/wp-content/uploads/2019/05/iStock-586199980-1024x683.jpg', 
'http://www.maisaprendizagem.com.br/wp-content/uploads/2019/03/Depositphotos_102331804_xl-2015.jpg', 'https://d3i6fh83elv35t.cloudfront.net/static/2019/09/sleeping-student_GettyImages-672317336-1024x683.jpg', 'https://i2.wp.com/www.society19.com/wp-content/uploads/2020/03/bored.png?fit=1000,667&ssl=1', 'https://a57.foxnews.com/a57.foxnews.com/static.foxnews.com/foxnews.com/content/uploads/2018/09/640/320/1862/1048/tired-teenager-istock-large.jpg?ve=1&tl=1?ve=1&tl=1', 'https://media.istockphoto.com/photos/large-group-of-bored-students-at-lecture-hall-picture-id1136569008?s=612x612', 'https://image.freepik.com/free-photo/bored-tired-asian-student-doing-homework-i_34435-2739.jpg', 'https://cf.girlsaskguys.com/a31555/ce55cea2-c3c7-451a-86a1-a6fdfc37ea93.jpg', 'https://image.freepik.com/free-photo/bored-student-boy_102671-6230.jpg', 'https://st3.depositphotos.com/1809585/16096/i/1600/depositphotos_160963300-stock-photo-bored-students-listening-lesson-in.jpg', 'https://weareive.org/wp-content/uploads/2017/10/Bored-Girl-at-School.jpg', 'https://www.greatschools.org/gk/wp-content/uploads/2010/03/Middler-schooler-bored.jpg', 'https://cdn.apexlearning.com/styles/standard_text_column/s3fs/2016-06/Combat+Classroom+Boredom.jpg?itok=OPbDMkVB', 'https://image.freepik.com/free-photo/bored-students-wasting-time_23-2147659213.jpg', 'https://img.freepik.com/free-photo/bored-student-class_23-2147650728.jpg?size=626&ext=jpg', 'https://todaysmama.com/.image/t_share/MTU5OTEwNDM5Nzk4MDU2MzEx/bored-kid.jpg', 'https://i0.wp.com/oupeltglobalblog.com/wp-content/uploads/2020/10/Untitled-design-8.png?fit=1200,675&ssl=1', 'https://www.learningliftoff.com/wp-content/uploads/2015/05/ClassroomBoredom-730x390.jpg', 'https://teach4theheart.com/wp-content/uploads/2018/05/bored-student.jpg', 'http://cdn.24.co.za/files/Cms/General/d/3605/928cc3c007364bada81583953d756204.jpg', 
'https://www.saisivahospital.com/wp-content/uploads/2019/04/A-Detailed-Review-Of-Attention-Deficit-Hyperactivity-Disorders-ADHD2-1024x1024.jpg', 'http://www.tricitypsychology.com/blog/wp-content/uploads/2010/03/Bored-Students.jpg', 'https://media.gettyimages.com/photos/elementary-school-student-bored-in-class-picture-id937072968', 'https://mediaproxy.salon.com/width/1200/https://media.salon.com/2013/08/student_asleep.jpg', 'https://thumbs.dreamstime.com/b/bored-student-handsome-male-holding-hand-chin-looking-textbook-young-women-sitting-desk-behind-him-39498902.jpg', 'https://1.bp.blogspot.com/-nDSvslFy6rs/UBLmVHufH2I/AAAAAAAAACo/kgKDpHmQI_4/s1600/A_student_bored_in_class_pe0051394.jpg', 'https://thumbs.dreamstime.com/z/bored-student-28604296.jpg', 'https://onepeterfive.com/wp-content/uploads/2019/09/bored-reading-1024x768.jpg', 'https://naibuzz.com/wp-content/uploads/2015/02/Bored_Boy.jpg', 'https://thumbs.dreamstime.com/b/bored-young-girl-doing-homework-desk-bedroom-55895614.jpg', 'https://images-wixmp-ed30a86b8c4ca887773594c2.wixmp.com/f/cc5de369-4f0b-451e-89cd-b4ee947a9333/d2p39rm-14b487b4-7c98-422f-839e-6e345070bb44.png/v1/fill/w_1280,h_930,q_80,strp/bored_student_by_woodsman819_d2p39rm-fullview.jpg?token=eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJzdWIiOiJ1cm46YXBwOjdlMGQxODg5ODIyNjQzNzNhNWYwZDQxNWVhMGQyNmUwIiwiaXNzIjoidXJuOmFwcDo3ZTBkMTg4OTgyMjY0MzczYTVmMGQ0MTVlYTBkMjZlMCIsIm9iaiI6W1t7ImhlaWdodCI6Ijw9OTMwIiwicGF0aCI6IlwvZlwvY2M1ZGUzNjktNGYwYi00NTFlLTg5Y2QtYjRlZTk0N2E5MzMzXC9kMnAzOXJtLTE0YjQ4N2I0LTdjOTgtNDIyZi04MzllLTZlMzQ1MDcwYmI0NC5wbmciLCJ3aWR0aCI6Ijw9MTI4MCJ9XV0sImF1ZCI6WyJ1cm46c2VydmljZTppbWFnZS5vcGVyYXRpb25zIl19.dUgmn0h3brM_Mx6OkafoJjufWse9DSYFSfpNjYfAVyA', 'https://media.istockphoto.com/photos/today-i-am-not-interested-in-this-lesson-picture-id480511610?k=6&m=480511610&s=612x612&w=0&h=DCAAK0RsN_hGyYQoYjGrlHroCVjen3swt-ms4TKoHRs=', 'https://blog.echo360.com/hs-fs/hubfs/Bored_Students.jpg?width=424&name=Bored_Students.jpg', 
'https://st.depositphotos.com/1010613/4367/i/950/depositphotos_43675343-stock-photo-bored-student-with-classmates-sleeping.jpg', 'https://static1.bigstockphoto.com/1/9/3/large1500/3917143.jpg', 'https://media.istockphoto.com/photos/bored-student-picture-id184941500', 'https://thumbs.dreamstime.com/b/bored-students-sitting-lesson-tired-adult-classroom-60139426.jpg', 'https://media.gettyimages.com/photos/bored-student-text-messaging-on-cell-phone-during-a-lecture-picture-id485383602', 'https://www.17000kmaway.com/wp-content/uploads/2020/08/New-Educational-Digital-Studying-Tools-Will-Sense-Student-Boredom-as-well-as-other-Emotions1.jpg', 'http://www.listenandlearn.org/the-teachers-handbook/wp-content/uploads/2013/12/SuperStock_1829-14303.jpg', 'https://thumbs.dreamstime.com/z/bored-student-school-desk-white-background-35592704.jpg', 'https://thumbs.dreamstime.com/b/bored-student-listening-to-teacher-amphitheater-facial-expression-197260379.jpg', 'http://boredomlab.org/wp-content/uploads/2020/04/bored-students.jpg', 'https://thumbs.dreamstime.com/b/bored-student-headphones-books-white-background-51451279.jpg', 'https://www.understandingboys.com.au/wp-content/uploads/2017/07/post/iStock-579120260-2121x1414.jpg', 'https://thumbs.dreamstime.com/z/bored-student-17617534.jpg', 'https://thumbs.dreamstime.com/b/bored-student-14686534.jpg', 'https://thumbs.dreamstime.com/z/bored-college-student-stack-books-29648866.jpg', 'https://pubsonline.informs.org/do/10.1287/orms.2019.05.14/full/Classroom.png', 'https://thumbs.dreamstime.com/z/bored-student-young-man-day-dreaming-resting-his-bench-73481076.jpg', 'https://media.istockphoto.com/photos/bored-student-picture-id139873494', 'https://soundasleeplab.com/wp-content/uploads/tired_teen-scaled.jpg', 'https://thumbs.dreamstime.com/b/bored-teenage-student-girl-studying-home-looking-away-42704905.jpg', 
'https://thumbs.dreamstime.com/b/bored-student-young-woman-books-library-people-knowledge-education-literature-school-concept-girl-dreaming-66354143.jpg', 'https://thumbs.dreamstime.com/b/bored-student-21495229.jpg', 'https://thumbs.dreamstime.com/b/young-man-student-feel-bored-trying-to-studying-entry-exams-to-university-college-bored-student-books-library-114178119.jpg', 'https://media.istockphoto.com/photos/bored-and-unchallenged-student-picture-id154891368', 'https://images.squarespace-cdn.com/content/v1/56c7e79d8a65e2903b649d12/1484614540398-7CEF5RQFDBE0J5H9GX67/image-asset.jpeg', 'https://thumbs.dreamstime.com/b/bored-teenage-student-using-laptop-sitting-outside-steps-41020137.jpg', 'https://thumbs.dreamstime.com/b/classes-1098108.jpg', 'http://urbanatural.in/tips/wp-content/uploads/2016/07/Children_Bored.jpg', 'https://c8.alamy.com/comp/C244XD/bored-male-student-during-an-university-lesson-C244XD.jpg', 'https://www.sacbee.com/news/business/b23jyx/picture121926253/alternates/LANDSCAPE_1140/Bored+student+1220', 'https://thumbs.dreamstime.com/z/bored-student-17139310.jpg', 'https://www.pinclipart.com/picdir/big/64-643802_picture-royalty-free-download-cartoon-boredom-clip-bored.png', 'https://images.theconversation.com/files/364650/original/file-20201021-23-uo30p4.jpg?ixlib=rb-1.1.0&q=15&auto=format&w=600&h=400&fit=crop&dpr=3', 'https://thumbs.dreamstime.com/z/bored-student-girl-doing-her-homework-table-135403263.jpg', 'https://www.shift.is/wp-content/uploads/2020/11/bored-students-2-630x420.jpg', 'https://headinthesandblog.org/wp-content/uploads/2016/06/bored-with-school-via-salon.com_.jpg', 'https://www.demcointeriors.com/wp-content/uploads/2017/07/iStock-586199980-1024x683.jpg', 'https://media.gettyimages.com/photos/above-view-of-bored-students-in-the-classroom-picture-id626385588?s=612x612']

Images downloaded.

Doing some more setting up & checking on the downloaded files...

Fns = [Path('scraped_images/bad/Bad-Grades-in-College.jpg'), Path('scraped_images/bad/bad-student-learning-with-difficulties-picture-id622182790.jpg'), Path('scraped_images/bad/GettyImages-157672984-59cff07e685fbe0011ebe775.jpg'), Path('scraped_images/bad/254ae95072c8229452084a6f92a30374cb547ad4.jpg'), Path('scraped_images/bad/there_are_no_bad_teachers_only_bad_students_card-raceeb6fd10d046578179c6da998e1ea8_xvuat_8byvr_512.jpg'), Path('scraped_images/bad/s-l640.jpg'), Path('scraped_images/bad/8432644._UY630_SR1200,630_.jpg'), Path('scraped_images/bad/how-to-get-a-student-loan-with-bad-credit-600x225.jpg'), Path('scraped_images/bad/AdobeStock_84965857.jpeg'), Path('scraped_images/bad/bad-student-portrait-books-hand-38491772.jpg'), Path('scraped_images/bad/article-1330868-0B78415F000005DC-347_468x459.jpg'), Path('scraped_images/bad/bad-student-behavior-enews.jpg'), Path('scraped_images/bad/bd0b7c4a-f8cd-11e4-b106-bd39ccd745b8-1560x1094.jpg'), Path('scraped_images/bad/bf3962a5dcedd4583ff13406cd29.jpg'), Path('scraped_images/bad/badteaching-56f36554c33013905b35f046fadd87cd3f921f56.jpg'), Path('scraped_images/bad/teacher-checking-sleeping-student-for-a-pulse-picture-id123466509.jpg'), Path('scraped_images/bad/sad-student-looking-at-test-paper-with-bad-grade-vector-id944750686.jpg'), Path('scraped_images/bad/guilty-student-boy-and-teacher-vector-id636071146.jpg'), Path('scraped_images/bad/school-bad-behaviour-vector-id894983104.jpg'), Path('scraped_images/bad/51FLJ0-jPFL._SY291_BO1,204,203,200_QL40_.jpg'), Path('scraped_images/bad/sad-student-looking-at-test-paper-with-bad-mark-vector-13755837.jpg'), Path('scraped_images/bad/bad-teacher-straightfromthea.gif'), Path('scraped_images/bad/shutterstock_151171667.jpg'), Path('scraped_images/bad/t1larg.bad.student.writing.jpg'), Path('scraped_images/bad/bad-student-character-vector-flat-cartoon-illustration-vector-id586192316.jpg'), Path('scraped_images/bad/no-bad-students-article-scaled.jpg'), 
Path('scraped_images/bad/featured-img-of-post-175312.jpg'), Path('scraped_images/bad/bs.jpg'), Path('scraped_images/bad/500_F_286434255_LFrZ5YBYoltAWdhSTqCygcjSBW1hDDnO.jpg'), Path('scraped_images/bad/1504090818_1-org.jpg'), Path('scraped_images/bad/teacher_helping_kids-750x403.jpg'), Path('scraped_images/bad/maxresdefault.jpg'), Path('scraped_images/bad/school-problems-sad-child-vector-id477168506.jpg'), Path('scraped_images/bad/_95292490_classroombadbehaviourthinkstock.jpg'), Path('scraped_images/bad/sad-student-looking-at-test-paper-with-bad-grade-vector-20426266.jpg'), Path('scraped_images/bad/School.Behavior.Improve_listening_skills_in_ADHD_kids.Article.5934A.girls_passing_notes.ts_77137314-3.jpg'), Path('scraped_images/bad/202009asia_thailand_students.jpg'), Path('scraped_images/bad/bad-student-vector-id507490311.jpg'), Path('scraped_images/bad/student_getting_bad_result.jpg'), Path('scraped_images/bad/maxresdefault1.jpg'), Path('scraped_images/bad/Untitled4.jpg'), Path('scraped_images/bad/image-20150825-15912-vnio5s.jpg'), Path('scraped_images/bad/512x512bb.jpg'), Path('scraped_images/bad/20mon2web-videoSixteenByNineJumbo1600.jpg'), Path('scraped_images/bad/sad-student-showing-paper-with-f-grade-vector-id501914378.jpg'), Path('scraped_images/bad/BEHAVIOR_C_630.jpg'), Path('scraped_images/bad/_84864995_hi028542031.jpg'), Path('scraped_images/bad/teacher_denver001.jpg'), Path('scraped_images/bad/Fotolia_61768987_Subscription_Monthly_M-1200x800.jpg'), Path('scraped_images/bad/Bad-classroom-750x325.jpg'), Path('scraped_images/bad/studentbehavingbadly900-gettyimages-472977148.jpg'), Path('scraped_images/bad/img-bad-teacher_112037787749.jpg'), Path('scraped_images/bad/teacher-reprimanding-student.jpg'), Path('scraped_images/bad/cartoon-illustration-of-children-fighting-after-school-vector-id186549608.jpg'), Path('scraped_images/bad/article-1361289-0B00E071000005DC-550_468x317.jpg'), Path('scraped_images/bad/student-5.jpg'), 
Path('scraped_images/bad/shutterstock_160640804.0.jpg'), Path('scraped_images/bad/badclassroom.jpg'), Path('scraped_images/bad/blog-motivate-students-without-feeling-like-bad-guy.png'), Path('scraped_images/bad/article-2686853-1F86AF0300000578-59_306x423.jpg'), Path('scraped_images/bad/24BADTEACHER1-videoSixteenByNine1050.jpg'), Path('scraped_images/bad/20170705hero.jpg'), Path('scraped_images/bad/d59e2f46-f8cd-11e4-b106-bd39ccd745b8-780x520.jpg'), Path('scraped_images/bad/videoblocks-a-bad-student-is-playing-with-smartphone-during-class-under-the-desk-hiding-from-the-teacher-the-teacher-observes-how-the-student-is-note-taking-lecture-plays-on-a-smartphone_shbxa4h1e_thumbnail-1080_01.png'), Path('scraped_images/bad/shutterstock_1182248935.jpg'), Path('scraped_images/bad/1B5931659-g-tmoms-130208-boy-student.jpg'), Path('scraped_images/bad/o-BADCLASSROOMHABITS-facebook.jpg'), Path('scraped_images/bad/image.png'), Path('scraped_images/bad/Real-Teacher-Tips-for-Anxiety-Free-Classroom-A.jpg'), Path('scraped_images/bad/bad-vs-good-photos-640x384.png'), Path('scraped_images/bad/bad-genius_poster.jpg'), Path('scraped_images/bad/maxresdefault12.jpg'), Path('scraped_images/bad/011513lunchlab_1280x720.jpg'), Path('scraped_images/bad/time-management-thenurselink-1024x684-1.jpg'), Path('scraped_images/bad/o-BAD-STUDENT-facebook.jpg'), Path('scraped_images/bad/20150154b6a7061a5d7.jpg'), Path('scraped_images/bad/54277671studying.jpg'), Path('scraped_images/bad/junior-school-bad-behaviour-picture-id157529104.jpg'), Path('scraped_images/bad/o-DV1644042-facebook.jpg'), Path('scraped_images/bad/500_F_166764287_gwB9S351sSEn80xIXPUoGrBvTzEXYLd4.jpg'), Path('scraped_images/bored/bored-student-young-woman-books-library-people-knowledge-education-literature-school-concept-girl-dreaming-66354143.jpg'), Path('scraped_images/bored/bored-young-girl-doing-homework-desk-bedroom-55895614.jpg'), Path('scraped_images/bored/bored-student-14686534.jpg'), 
Path('scraped_images/bored/ce55cea2-c3c7-451a-86a1-a6fdfc37ea93.jpg'), Path('scraped_images/bored/ClassroomBoredom-730x390.jpg'), Path('scraped_images/bored/bored-reading-1024x768.jpg'), Path('scraped_images/bored/iStock-579120260-2121x1414.jpg'), Path('scraped_images/bored/New-Educational-Digital-Studying-Tools-Will-Sense-Student-Boredom-as-well-as-other-Emotions1.jpg'), Path('scraped_images/bored/student_asleep.jpg'), Path('scraped_images/bored/bored-teenage-student-girl-studying-home-looking-away-42704905.jpg'), Path('scraped_images/bored/Bored-Students.jpg'), Path('scraped_images/bored/bored-student-28604296.jpg'), Path('scraped_images/bored/bored-college-student-stack-books-29648866.jpg'), Path('scraped_images/bored/tired_teen-scaled.jpg'), Path('scraped_images/bored/bored-student-21495229.jpg'), Path('scraped_images/bored/large-group-of-bored-students-at-lecture-hall-picture-id1136569008.jpg'), Path('scraped_images/bored/bored-student-17626957.jpg'), Path('scraped_images/bored/bored-student-text-messaging-on-cell-phone-during-a-lecture-picture-id485383602.jpg'), Path('scraped_images/bored/bored-student-picture-id184941500.jpg'), Path('scraped_images/bored/bored-student.jpeg'), Path('scraped_images/bored/above-view-of-bored-students-in-the-classroom-picture-id626385588.jpg'), Path('scraped_images/bored/bored-student-young-man-books-library-people-knowledge-education-literature-school-concept-dreaming-58170287.jpg'), Path('scraped_images/bored/bored-student-school-desk-white-background-35592704.jpg'), Path('scraped_images/bored/bored_student_app_2.jpg'), Path('scraped_images/bored/bored-student-handsome-male-holding-hand-chin-looking-textbook-young-women-sitting-desk-behind-him-39498902.jpg'), Path('scraped_images/bored/bored-student.jpg'), Path('scraped_images/bored/today-i-am-not-interested-in-this-lesson-picture-id480511610.jpg'), Path('scraped_images/bored/bored-student-listening-to-teacher-amphitheater-facial-expression-197260379.jpg'), 
Path('scraped_images/bored/depositphotos_43675343-stock-photo-bored-student-with-classmates-sleeping.jpg'), Path('scraped_images/bored/bored-students-2-630x420.jpg'), Path('scraped_images/bored/Bored-Girl-at-School.jpg'), Path('scraped_images/bored/SuperStock_1829-14303.jpg'), Path('scraped_images/bored/child-bored-in-school.jpeg'), Path('scraped_images/bored/iStock-586199980-1024x683.jpg'), Path('scraped_images/bored/bored-and-unchallenged-student-picture-id154891368.jpg'), Path('scraped_images/bored/bored.png'), Path('scraped_images/bored/bored-student-headphones-books-white-background-51451279.jpg'), Path('scraped_images/bored/maxresdefault.jpg'), Path('scraped_images/bored/Bored-570285cb3df78c7d9e6d8fe1.jpg'), Path('scraped_images/bored/class-full-o-bored-students-1465936.jpg'), Path('scraped_images/bored/bored-student-tired-yawning-school-desk-classroom-47201192.jpg'), Path('scraped_images/bored/bigstock-tired-and-bored-student-22669502.jpg'), Path('scraped_images/bored/bored-students.jpg'), Path('scraped_images/bored/64-643802_picture-royalty-free-download-cartoon-boredom-clip-bored.png'), Path('scraped_images/bored/young-man-student-feel-bored-trying-to-studying-entry-exams-to-university-college-bored-student-books-library-114178119.jpg'), Path('scraped_images/bored/bored-students-sitting-lesson-tired-adult-classroom-60139426.jpg'), Path('scraped_images/bored/1546786902_bored-student2.jpg'), Path('scraped_images/bored/elementary-school-student-bored-in-class-picture-id937072968.jpg'), Path('scraped_images/bored/bored-student-girl-doing-her-homework-table-135403263.jpg'), Path('scraped_images/bored/bored-student-17139310.jpg'), Path('scraped_images/bored/A-Detailed-Review-Of-Attention-Deficit-Hyperactivity-Disorders-ADHD2-1024x1024.jpg'), Path('scraped_images/bored/bored-student-17617534.jpg'), Path('scraped_images/bored/Children_Bored.jpg'), Path('scraped_images/bored/Depositphotos_102331804_xl-2015.jpg'), 
Path('scraped_images/bored/sleeping-student_GettyImages-672317336-1024x683.jpg'), Path('scraped_images/bored/image-asset.jpeg'), Path('scraped_images/bored/bored-students-1-scaled.jpg'), Path('scraped_images/bored/file-20201021-23-uo30p4.jpg'), Path('scraped_images/bored/A_student_bored_in_class_pe0051394.jpg'), Path('scraped_images/bored/shutterstock_160640804.0.jpg'), Path('scraped_images/bored/bored-male-student-during-an-university-lesson-C244XD.jpg'), Path('scraped_images/bored/bored-kid.jpg'), Path('scraped_images/bored/depositphotos_160963300-stock-photo-bored-students-listening-lesson-in.jpg'), Path('scraped_images/bored/bored-student-young-man-day-dreaming-resting-his-bench-73481076.jpg'), Path('scraped_images/bored/tired-teenager-istock-large.jpg'), Path('scraped_images/bored/Middler-schooler-bored.jpg'), Path('scraped_images/bored/bored-student-picture-id139873494.jpg'), Path('scraped_images/bored/bored-student-desk-classroom-17048939.jpg'), Path('scraped_images/bored/3917143.jpg'), Path('scraped_images/bored/bored-student-091417.jpg'), Path('scraped_images/bored/bored-with-school-via-salon.com_.jpg'), Path('scraped_images/bored/Bored-Student-Feature-Image.jpg'), Path('scraped_images/bored/Bored_Students.jpg'), Path('scraped_images/bored/classes-1098108.jpg'), Path('scraped_images/bored/iStock-586199980-1024x6831.jpg'), Path('scraped_images/bored/bored-teenage-student-using-laptop-sitting-outside-steps-41020137.jpg'), Path('scraped_images/bored/Bored_Boy.jpg'), Path('scraped_images/good/college-students.jpg'), Path('scraped_images/good/good-students.jpg'), Path('scraped_images/good/sermon_7.jpg'), Path('scraped_images/good/1181304-Marva-Collins-Quote-The-good-teacher-makes-the-poor-student-good.jpg'), Path('scraped_images/good/iStock-610839440.jpg'), Path('scraped_images/good/55a731e081df0ad79b34d5f979d74993.jpg'), Path('scraped_images/good/Pretty_Good_Student.jpg'), Path('scraped_images/good/thesuccessfulstudent.gif'), 
Path('scraped_images/good/group-of-school-kids-raising-hands-in-classroom-860597978-5ab59c403418c600365665f3.jpg'), Path('scraped_images/good/2351588-Marva-Collins-Quote-The-good-teacher-makes-the-poor-student-good.jpg'), Path('scraped_images/good/slide_9.jpg'), Path('scraped_images/good/eDS1jHpHu6_1401974637587.jpg'), Path('scraped_images/good/Mature-students.jpg'), Path('scraped_images/good/reportcardsmall-300x200.jpg'), Path('scraped_images/good/Successful-Students-1.jpg'), Path('scraped_images/good/Qualities-of-a-Good-Student-800x500.png'), Path('scraped_images/good/five-qualities-of-successful-students.jpg'), Path('scraped_images/good/studying1.jpg'), Path('scraped_images/good/p194462_v_h10_ac.jpg'), Path('scraped_images/good/good-student-smiling-schoolgirl-engaged-table-books-isolated-over-white-40585265.jpg'), Path('scraped_images/good/4749463-Marva-Collins-Quote-The-good-teacher-makes-the-poor-student-good.jpg'), Path('scraped_images/good/08142020-Students-940x529.jpg'), Path('scraped_images/good/a-good-student-is-special.jpg'), Path('scraped_images/good/c72a35d4f0af956c649948d7f20e4686.jpg'), Path('scraped_images/good/Blog-Thumbnail182.jpg'), Path('scraped_images/good/Qualities-of-A-Good-Student-1.jpg'), Path('scraped_images/good/AdobeStock_132869690-1024x683.jpeg'), Path('scraped_images/good/good-student-9799619.jpg'), Path('scraped_images/good/dv740020.jpg'), Path('scraped_images/good/maxresdefault1234.jpg'), Path('scraped_images/good/iStock-1031377384-1536x1024.jpg'), Path('scraped_images/good/perfect-student-characteristics-4148286_final-ab0f5265c6574d66bfc368c627956530.png'), Path('scraped_images/good/classroom-Copy.jpg'), Path('scraped_images/good/cm1225_WM_THUMB_Seven_ways_to_be_a_good_student__94274.1493678783.1280.1280.jpg'), Path('scraped_images/good/hqdefault.jpg'), Path('scraped_images/good/QUALITIES-OF-A-GOOD-STUDENT.jpg'), Path('scraped_images/good/maxresdefault.jpg'), Path('scraped_images/good/10-ways-354x500.png'), 
Path('scraped_images/good/Qualities-Of-A-Good-Student-1-1.png'), Path('scraped_images/good/7habits1.jpg'), Path('scraped_images/good/a-good-student-is-one-who-will-teach-you-something-quote-1.jpg'), Path('scraped_images/good/Thrive-During-Finals-.jpg'), Path('scraped_images/good/maxresdefault1.jpg'), Path('scraped_images/good/good-student-1-728.jpg'), Path('scraped_images/good/student-1.jpg'), Path('scraped_images/good/df3cb8181c9429f40a14d4e1148dd3ba.jpg'), Path('scraped_images/good/CD-114249_L.jpg'), Path('scraped_images/good/o5ASl6gOpO9zCHU42vdFOoExBGo.jpg'), Path('scraped_images/good/are_you_a_good_student_featured.jpg'), Path('scraped_images/good/Depositphotos_24196475_l-2015.jpg'), Path('scraped_images/good/pexels-katerina-holmes-5905557-scaled.jpg'), Path('scraped_images/good/how-to-be-a-good-student.png'), Path('scraped_images/good/iStock-480507030.jpg'), Path('scraped_images/good/good-student-portrait-smart-ten-years-girl-big-glasses-book-isolated-over-white-35904103.jpg'), Path('scraped_images/good/STUDY_STUDENTS_WITH_GOOD_GRADES_ARE_MORE_POPULAR_SECURE.jpg'), Path('scraped_images/good/shutterstock_102482084-e1552480105382.jpg'), Path('scraped_images/good/happy_math_student.jpg'), Path('scraped_images/good/png-transparent-student-school-star-teacher-good-student-s-text-class-logo.png'), Path('scraped_images/good/A+GOOD+STUDENT.jpg'), Path('scraped_images/good/1347467411_untitled-1.png'), Path('scraped_images/good/Students.jpg'), Path('scraped_images/good/Steps-To-Becoming-A-Good-Student-o3schools.jpg'), Path('scraped_images/good/4e2d70acff6d2e0be9d0b8c75579ec6f.png'), Path('scraped_images/good/young-good-student-school-girl-writing-essay-lesson-pretty-girl-trying-to-be-72610101.jpg'), Path('scraped_images/good/imageForSharing.jpg'), Path('scraped_images/good/studying-student-on-desk.jpg'), Path('scraped_images/good/good-student-picture-id143922844.jpg'), Path('scraped_images/good/10-Qualities-of-a-Good-Student-1.jpg'), 
Path('scraped_images/good/uOmBrbZBABF7G3F0QaaJJURJGCw.jpg'), Path('scraped_images/good/maxresdefault123.jpg'), Path('scraped_images/good/iStock_28423686_MEDIUM.jpg'), Path('scraped_images/good/maxresdefault123456.jpg'), Path('scraped_images/good/high-school-grades.jpg'), Path('scraped_images/good/Windom011.jpg'), Path('scraped_images/good/good-student.jpg'), Path('scraped_images/good/Studying.jpg'), Path('scraped_images/good/How_to_Get_Good_Grades_in_High_School.jpg'), Path('scraped_images/good/to-be-an-ideal-student.jpg'), Path('scraped_images/good/maxresdefault12.jpg'), Path('scraped_images/good/100602603.jpg'), Path('scraped_images/good/2018-03-IRS-Healthy-Schools.jpg'), Path('scraped_images/good/good-student-at-home.jpg'), Path('scraped_images/good/10-Lines-about-Ideal-Student.png'), Path('scraped_images/good/blog+14.jpg'), Path('scraped_images/good/page_1.jpg'), Path('scraped_images/good/maxresdefault12345.jpg')]

After checking images for issues, 243 (total) images remain.

We’re going to use FastAI for our Deep Learning model’s training loop. There are a few ways to specify data in FastAI and to create “DataLoaders” (abbreviated in code as “dls”).

Let’s set up a data loader for our images of “good [student]”, “bad [student]”, and “bored [student]” — knowing full well that these are going to be really problematic and maybe even unusable. (How could anybody hope to capture the idea of “good student” in a single image?? But we’re doing it anyway, because someone else will be making such an app.) In fact, let’s take a look at some sample images:

(Don’t worry about the specifics of the following code block for now; we’ll come back to it later in the course.)

Load the dataset

# Don't worry about the specifics of this code just yet. We'll talk about it later in the course.

# In this lesson, we're essentially going to be following "Chapter 2: Production" from the FastAI book:

# https://github.com/fastai/fastbook/blob/master/02_production.ipynb

def setup_dls(
    path  # string specifying top level of directory/folder tree where images are stored.
    ):
    "Setup FastAI DataLoaders"
    data = DataBlock(  # define fastai data structure
        blocks=(ImageBlock, CategoryBlock),
        get_items=get_image_files,
        splitter=RandomSplitter(valid_pct=0.2, seed=42),
        get_y=parent_label,
        item_tfms=RandomResizedCrop(224, min_scale=0.5),
        batch_tfms=aug_transforms())
    dls = data.dataloaders(path)  # define dataloader(s)
    return dls

dls = setup_dls(path)

Let’s take a look at some of the images:

print("Types of Students (good, bad, bored):")
dls.show_batch()

Types of Students (good, bad, bored):

…And remember, bored students mean we fire the teacher! You’re not bored, are you? Don’t answer.

# Note: you can run show_batch() over & over, & it will grab a different set each time:

dls.show_batch()

# yikes, looks like we got cartoon images mixed in. hmmmm

…Ok, so you’re probably noticing that scraping from the internet doesn’t guarantee quality data, right? Some of the images don’t even show human beings. Depending on when you ran the scraping code, you might see cartoons, or…lots of things.

You’re probably also noticing that maybe the task we’re trying to perform isn’t really even a meaningful one, but that will not dissuade us in our ethically-challenged app development!

Clean the Data – First?

We’re going to want to “clean” the data. But rather than cleaning the data immediately, “from scratch”, we actually will (as the FastAI authors recommend) train our model first anyway, and then use the model to help us go back and sift through which images are causing problems.

Terminology Interlude

What we did above was actually create two datasets, which are subsets of our total dataset. One subset is called the Training dataset (which FastAI abbreviates as Train) and this is the one that the neural network uses for training. The other subset is called the Validation set (which FastAI abbreviates as Valid) and is “held out” and used to monitor how well the model is “generalizing” to data it’s never seen before.

- Training set: Subset of total data, usually approximately 80% of the total dataset. This is the only part the model “trains” on.

- Validation set: Held-out subset, usually approximately 20% of total data. Used for monitoring, i.e. for metrics such as accuracy & error rate (defined below). Useful for providing some indication of how well the model generalizes.

- Accuracy: The percentage of correct predictions (using only the Validation set) made by the model.

- Error rate: Same information as the Accuracy, just expressed as 100% - Accuracy.

- Loss: A function that measures “how far off” we are in our predictions compared to the “correct” answer (i.e. the labels). Training consists in trying to lower the loss by a series of tiny adjustments to the model. Unlike Accuracy, Loss needs to be a continuous function of the model & the inputs. Training loss (i.e. loss on the Training set) is pretty much guaranteed to always decrease, whereas the true measure of how well the training is going is whether the Validation loss is also decreasing.

- Link to course Glossary.
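To make the split and the two metrics concrete, here is a minimal sketch in plain Python. It is not FastAI's own code: the helper names `random_split` and `accuracy` are made up for illustration, and FastAI's `RandomSplitter` draws its shuffle from PyTorch's random number generator, so the particular images that land in each subset will differ. The counts, though, should come out the same for the 243 images that remained after the check above.

```python
import random

def random_split(n_items, valid_pct=0.2, seed=42):
    """Shuffle indices 0..n_items-1 and hold out the first valid_pct of them."""
    idxs = list(range(n_items))
    random.Random(seed).shuffle(idxs)   # seeded, so the split is reproducible
    cut = int(valid_pct * n_items)      # number of held-out (Validation) items
    return idxs[cut:], idxs[:cut]       # (train indices, valid indices)

def accuracy(preds, labels):
    """Fraction of predictions that match their labels."""
    correct = sum(p == y for p, y in zip(preds, labels))
    return correct / len(labels)

train_idx, valid_idx = random_split(243)   # 243 images remained after checking
print(len(train_idx), len(valid_idx))

# Hypothetical predictions for five Validation images (made-up values).
labels = ["good", "bad", "bored", "good", "bad"]
preds  = ["good", "bad", "good",  "good", "bored"]
acc = accuracy(preds, labels)
err = 1 - acc                              # error rate is just the complement
print(f"accuracy = {acc:.2f}, error rate = {err:.2f}")
```

With 243 images and `valid_pct=0.2`, the held-out set has 48 images, which is the same number of points the training log reports plotting each epoch.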

There are nice graphical ways to envision Accuracy & Loss, which we’ll look at after we try training (below).

Train the Model

We’re going to use FastAI to train our model with just a couple lines of code, also using my “triangle diagram” visualization from mrspuff.viz so that we can track the progress of the model’s training.

In the following, if you mouse-over the “dots” you’ll see a thumbnail of images from the Validation set. The color shows how the image was labeled (blue=“good”, red=“bad”, green=“bored”) whereas the region of the diagram it appears in indicates what the model predicts for that image.
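One way to picture how a prediction could end up at a particular spot in a triangle: treat the model's three class probabilities as weights on the triangle's three corners. The sketch below assumes a standard ternary (barycentric) layout; the corner positions and the helper names `to_triangle` and `predicted_class` are chosen here for illustration, and the actual `mrspuff.viz` implementation may well differ.

```python
import math

# Corners of an equilateral triangle, one per class (an arbitrary layout chosen here).
CORNERS = {
    "good":  (0.0, 0.0),
    "bad":   (1.0, 0.0),
    "bored": (0.5, math.sqrt(3) / 2),
}

def to_triangle(probs):
    """Map class probabilities (summing to 1) to an (x, y) point inside the triangle."""
    x = sum(p * CORNERS[c][0] for c, p in probs.items())
    y = sum(p * CORNERS[c][1] for c, p in probs.items())
    return x, y

def predicted_class(probs):
    """The prediction is the class with the highest probability."""
    return max(probs, key=probs.get)

# Made-up probabilities: one confident prediction, one that is nearly undecided.
confident = {"good": 0.90, "bad": 0.05, "bored": 0.05}
unsure    = {"good": 0.34, "bad": 0.33, "bored": 0.33}
for probs in (confident, unsure):
    x, y = to_triangle(probs)
    print(predicted_class(probs), f"({x:.3f}, {y:.3f})")
```

Under this layout a confident prediction sits near its class's corner, while an undecided one sits near the center of the triangle, close to all three decision boundaries.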

Note: if you get prompted to “Connect your Google Drive,” say yes.

learn = cnn_learner(dls, resnet34, metrics=[accuracy, error_rate])

learn.fine_tune(6, cbs=mrspuff.viz.VizPreds) # fine_tune does 1 epoch 'frozen', then as many more epochs as you specify

/usr/local/lib/python3.10/dist-packages/fastai/vision/learner.py:288: UserWarning: `cnn_learner` has been renamed to `vision_learner` -- please update your code

Generating (URLS of) thumbnail images...

Mounted at /gdrive

Thumbnails saved to Google Drive in /gdrive/My Drive/scraped_images_thumbs/

Waiting on Google Drive until URLs are ready.

| epoch | train_loss | valid_loss | accuracy | error_rate | time |
|---|---|---|---|---|---|
| 0 | 1.943105 | 3.541800 | 0.395833 | 0.604167 | 00:10 |

Epoch 0: Plotting 48 (= 48?) points:

| epoch | train_loss | valid_loss | accuracy | error_rate | time |
|---|---|---|---|---|---|
| 0 | 1.504644 | 1.940796 | 0.437500 | 0.562500 | 00:09 |
| 1 | 1.416114 | 1.330883 | 0.562500 | 0.437500 | 00:06 |
| 2 | 1.267659 | 1.174310 | 0.625000 | 0.375000 | 00:08 |
| 3 | 1.129715 | 1.196814 | 0.604167 | 0.395833 | 00:07 |
| 4 | 1.009037 | 1.155359 | 0.625000 | 0.375000 | 00:07 |
| 5 | 0.905851 | 1.120701 | 0.625000 | 0.375000 | 00:07 |

Epoch 0: Plotting 48 (= 48?) points:

Epoch 1: Plotting 48 (= 48?) points:

Epoch 2: Plotting 48 (= 48?) points:

Epoch 3: Plotting 48 (= 48?) points:

Epoch 4: Plotting 48 (= 48?) points:

Epoch 5: Plotting 48 (= 48?) points:

…Ok, so it starts off a complete jumble, and then…gets a bit better. Note that the “dots” you see above are only from the Validation set, so that we can really see how well the model is “learning”. If we showed the Training set, there’s a chance that the model could “cheat” by “memorizing” the training points, a process often described by the term “overfitting”.
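To make the Training-vs-Validation point concrete, here is a small sketch that uses only the loss numbers printed in the table above. The "gap" it prints is just my own illustrative quantity (Validation loss minus Training loss), not something FastAI reports.

```python
# Loss values copied from the six unfrozen epochs in the table above.
train_loss = [1.504644, 1.416114, 1.267659, 1.129715, 1.009037, 0.905851]
valid_loss = [1.940796, 1.330883, 1.174310, 1.196814, 1.155359, 1.120701]

# Training loss falls at every epoch...
train_always_falls = all(b < a for a, b in zip(train_loss, train_loss[1:]))

# ...but Validation loss does not (it ticks up at epoch 3).
valid_always_falls = all(b < a for a, b in zip(valid_loss, valid_loss[1:]))

# A widening gap between the two is one common hint of overfitting.
gap = [round(v - t, 3) for t, v in zip(train_loss, valid_loss)]

print("train always falls:", train_always_falls)
print("valid always falls:", valid_always_falls)
print("valid - train gap per epoch:", gap)
```

In this run the gap grows over the last three epochs, which is the sort of thing to keep an eye on when deciding how many epochs to train.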

Hey: if you’d like to see a “cleaner” example of this using animals instead of people, see my blog post from June 2021.

Now that you’ve seen these diagrams, let’s use them as examples for visualizing Accuracy, and its relation to Loss. Accuracy is how many of the dots end up in the region matching their label, i.e. on the correct side of the dividing line:

So that was 100% accuracy, but if the predicted points were juuuust barely on the other side of the decision boundaries, the Loss would be nearly the same as before (because loss is a measure of “distance” from the intended label) but the accuracy would drop to 0:

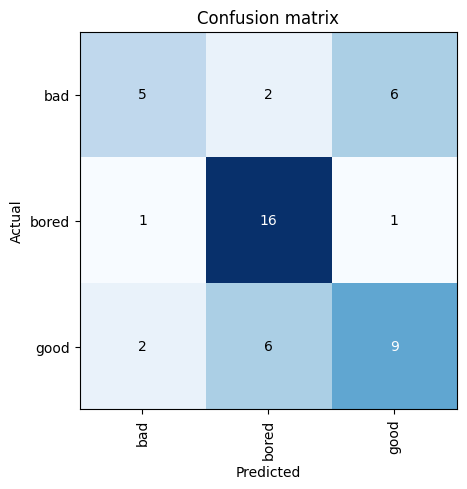
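Here is the same idea in numbers: a minimal sketch, assuming the loss is cross-entropy (the usual choice for classification) and using made-up probabilities for three images. The helper names `cross_entropy` and `accuracy` are mine, written out by hand rather than taken from FastAI.

```python
import numpy as np

def cross_entropy(probs, labels):
    """Mean negative log-probability assigned to the correct class."""
    probs = np.asarray(probs, dtype=float)
    return float(-np.log(probs[np.arange(len(labels)), labels]).mean())

def accuracy(probs, labels):
    """Fraction of rows whose largest probability is on the correct class."""
    return float((np.argmax(probs, axis=1) == np.asarray(labels)).mean())

labels = [0, 1, 2]  # hypothetical class indices for three images

# Each prediction is just barely on the correct side of the boundary...
barely_right = [[0.34, 0.33, 0.33],
                [0.33, 0.34, 0.33],
                [0.33, 0.33, 0.34]]

# ...or just barely on the wrong side.
barely_wrong = [[0.33, 0.34, 0.33],
                [0.33, 0.33, 0.34],
                [0.34, 0.33, 0.33]]

for name, p in [("barely right", barely_right), ("barely wrong", barely_wrong)]:
    print(f"{name}: accuracy={accuracy(p, labels):.2f}, loss={cross_entropy(p, labels):.3f}")
```

The accuracy flips from 1 to 0 while the loss barely moves, which is why Loss (not Accuracy) is the thing that training can nudge with tiny adjustments.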

Here’s a different way to look at how many predictions the model gets “right” (according to our labels from the search engine) or wrong, called a “Confusion Matrix”, which displays the same information as above but in tabular form:

interp = ClassificationInterpretation.from_learner(learn)

interp.plot_confusion_matrix()

Where again, this is only for the Validation data, since the Training data is already “learned” by the model.
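If you’re curious what goes into such a matrix, here is a hand-rolled sketch with made-up labels and predictions. It is not FastAI’s implementation; the class order and the `confusion_matrix` helper are assumptions for illustration, with rows for the actual label and columns for the predicted one.

```python
import numpy as np

def confusion_matrix(actual, predicted, n_classes):
    """Rows are the actual labels, columns are the predicted labels."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for a, p in zip(actual, predicted):
        cm[a, p] += 1
    return cm

classes = ["bad", "bored", "good"]        # assumed class order
actual    = [0, 0, 1, 1, 1, 2, 2, 2]      # made-up labels
predicted = [0, 1, 1, 1, 2, 2, 2, 0]      # made-up predictions

cm = confusion_matrix(actual, predicted, len(classes))
print(cm)

# The diagonal holds the correct predictions, so Accuracy = diagonal / total.
print("accuracy:", np.trace(cm) / cm.sum())
```

Everything off the diagonal is a mistake, and the row/column it sits in tells you which class got confused for which.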

Let’s take a look at some of the images that produce the “top losses”; these will be the ones most “distant” from their intended triangle-vertex in the triangle diagram:

interp.plot_top_losses(6, nrows=1)
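Conceptually, “top losses” just means computing the loss for each image separately and sorting. A minimal sketch, assuming cross-entropy loss and using made-up probabilities and labels (this is not what FastAI runs internally, only the idea):

```python
import numpy as np

# Made-up predicted probabilities for four Validation images (3 classes each).
probs = np.array([[0.80, 0.15, 0.05],
                  [0.10, 0.20, 0.70],
                  [0.02, 0.03, 0.95],
                  [0.40, 0.35, 0.25]])
labels = np.array([0, 2, 0, 1])   # made-up "correct" class indices

# Per-image cross-entropy: -log(probability given to the labeled class).
losses = -np.log(probs[np.arange(len(labels)), labels])

# Sort from largest loss to smallest.
order = np.argsort(losses)[::-1]
for i in order:
    print(f"image {i}: label={labels[i]}, predicted={probs[i].argmax()}, loss={losses[i]:.3f}")
```

The image at the top of the list is the one where the model was most confident about a class that disagrees with the label, which makes it a good candidate for a mislabeled or unsuitable image.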

Clean the dataset

How can we improve the dataset? Perhaps there are images that you think are mislabeled, or should be deleted entirely. For example, if we eventually want to hook this up to a webcam, how useful are cartoons going to be? For that matter, how useful are images of multiple students in a classroom going to be? (Probably not very.)

FastAI has a “widget” utility to go through and edit the labels for either the Train[ing] or Valid[ation] datasets. Go through it and delete all the cartoons and any other images you think don’t belong:

# Note it doesn't always show the whole dataset, so you might re-run this a few times

cleaner = ImageClassifierCleaner(learn, max_n=60)

cleaner

…What you do above doesn’t actually take effect until you run the following snippet of code:

# this code actually executes your cleaning operations

for idx in cleaner.delete(): cleaner.fns[idx].unlink()

for idx,cat in cleaner.change(): shutil.move(str(cleaner.fns[idx]), path/cat)

# What that did was actually unlink/delete files "on the disk".

# So after that, we need to re-load the data:

dls = setup_dls(path) # load new dls from disk...uh...note that this remixes Train/Valid though :-/

learn.dls = dls # replace the learner's dls with the newly-loaded one

# How many files are left now?

!ls -1 scraped_images/* | wc -l

# Now you can go back up and re-run the Cleaner to take another look

249

Spend some time cleaning the dataset, iterating the previous two code cells. Don’t forget to work on both Train and Valid[ation] sets!
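About that “remixes Train/Valid” comment: one possible way around it (not what this notebook does) is to decide each file’s set from a hash of its filename, so that deleting some files doesn’t reshuffle the rest. The `is_valid` helper and the filenames below are hypothetical, just to show the idea.

```python
import hashlib

def is_valid(filename, valid_percent=20):
    """Assign a file to the Validation set based only on a hash of its name."""
    digest = hashlib.md5(str(filename).encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100          # a stable number from 0 to 99
    return bucket < valid_percent

files = [f"scraped_images/good/img_{i:03d}.jpg" for i in range(200)]  # made-up names
before = {f: is_valid(f) for f in files}

# "Clean" the dataset by deleting every 7th file, then split again.
kept = [f for i, f in enumerate(files) if i % 7 != 0]
after = {f: is_valid(f) for f in kept}

# Every surviving file lands in the same set as before.
print(all(before[f] == after[f] for f in kept))
print(sum(after.values()), "of", len(kept), "files are in Validation")
```

FastAI’s `FuncSplitter` accepts a function like this (returning True for Validation items), though wiring it into `setup_dls` would depend on how that function is written.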

Retrain, rinse, repeat

So with notebooks like Colab, it’d be easiest to just scroll up and re-run the training loop using this new dls. But for comparison’s sake, I’ll re-type the training code below…

learn = cnn_learner(dls, resnet34, metrics=[accuracy, error_rate]) # reset the learner

learn.fine_tune(6, cbs=mrspuff.viz.VizPreds)

Generating (URLS of) thumbnail images...

Drive already mounted at /gdrive; to attempt to forcibly remount, call drive.mount("/gdrive", force_remount=True).

Thumbnails saved to Google Drive in /gdrive/My Drive/scraped_images_thumbs/

Waiting on Google Drive until URLs are ready.

| epoch | train_loss | valid_loss | accuracy | error_rate | time |
|---|---|---|---|---|---|
| 0 | 1.965557 | 2.528245 | 0.395833 | 0.604167 | 00:07 |

Epoch 0: Plotting 48 (= 48?) points:

| epoch | train_loss | valid_loss | accuracy | error_rate | time |
|---|---|---|---|---|---|
| 0 | 1.475666 | 1.753944 | 0.437500 | 0.562500 | 00:08 |
| 1 | 1.395235 | 1.199540 | 0.604167 | 0.395833 | 00:08 |
| 2 | 1.235018 | 1.214119 | 0.645833 | 0.354167 | 00:07 |
| 3 | 1.096986 | 1.275572 | 0.645833 | 0.354167 | 00:08 |
| 4 | 0.979683 | 1.309466 | 0.687500 | 0.312500 | 00:07 |
| 5 | 0.880683 | 1.317681 | 0.666667 | 0.333333 | 00:09 |

Epoch 0: Plotting 48 (= 48?) points:

Epoch 1: Plotting 48 (= 48?) points:

Epoch 2: Plotting 48 (= 48?) points:

Epoch 3: Plotting 48 (= 48?) points:

Epoch 4: Plotting 48 (= 48?) points:

Epoch 5: Plotting 48 (= 48?) points:

Keep cleaning the dataset and see if you can get the accuracy up to at least..oh…let’s shoot for 75%. Surely some school system would buy our product if it’s at least 75% accurate, right? ;-) THIS WILL PROBABLY TAKE YOU AN HOUR of data-cleaning. Cleaning is what Data Scientists and Data Engineers spend a lot of time doing.
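A quick bit of arithmetic on what 75% means here, assuming the Validation set stays at the 48 points reported in the “Plotting 48 points” output above (yours may differ after cleaning):

```python
import math

n_valid = 48          # points plotted per epoch in the output above
target = 0.75         # the accuracy goal

needed = math.ceil(target * n_valid)     # correct predictions required
step = 1 / n_valid                       # accuracy change from a single image

print(f"{needed} of {n_valid} images must be right for {target:.0%} accuracy")
print(f"one image changes accuracy by {step:.4f}")

# The accuracies reported in the tables correspond to whole-image counts:
for acc in (0.625000, 0.666667, 0.687500):
    print(f"accuracy {acc:.6f} = {round(acc * n_valid)}/{n_valid} correct")
```

With a Validation set this small, a jump from 0.625 to 0.667 is only two images, so don’t read too much into small differences between runs.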

And the important point is, people may use something like this for hiring decisions, or governments for guessing who’s a terrorist, or your local convenience store to predict whether you look like the sort of person who’s likely to shoplift and whether security should be notified to follow you around… You name it.

Students: Stop here for now. Still working on what follows.

Part 2: Salience: What’s the model looking at?

What are the features of the images that are leading to different classification “conclusions”? Maybe things like smiling for “good”, or a hand touching the head for “bored”? We can send in an image and then look at the internal activations of the model with respect to the 3 different class outcomes.

One package I love for salience is Misa Ogura’s flashtorch, but it relies on an old version of PyTorch so it won’t work for us. If you want to see her code in action for a different model, see her Medium post and/or her Colab example for cool stuff. But sadly, we must try other methods until flashtorch is updated. So, moving on…

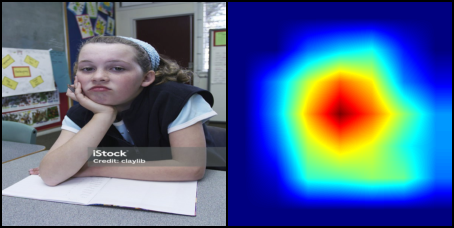

We’ll try a method known as “GradCAM”, which draws a blob around the area of an image that is most “salient” or “influential” in reaching a certain prediction-outcome.
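Before reaching for a package, here is roughly what the core of Grad-CAM computes. This is a sketch in plain NumPy with random stand-in numbers for the layer activations and gradients (a real run gets both from the model via hooks), so it shows the arithmetic only, not the `pytorch-gradcam` implementation.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-ins for what a real model would provide (made-up numbers):
#   activations: feature maps from a convolutional layer, shape (channels, H, W)
#   gradients:   d(class score)/d(activations), same shape
activations = rng.random((4, 7, 7))
gradients = rng.normal(size=(4, 7, 7))

# 1. One weight per channel: the gradient averaged over spatial positions.
weights = gradients.mean(axis=(1, 2))                      # shape (channels,)

# 2. Weighted sum of the feature maps, keeping only positive evidence (ReLU).
cam = np.maximum((weights[:, None, None] * activations).sum(axis=0), 0.0)

# 3. Rescale to [0, 1] so it can be drawn as a heatmap over the image.
if cam.max() > 0:
    cam = cam / cam.max()

print(cam.shape)
```

The result is a small, coarse map (here 7x7) that then gets upsampled to the image size, which is why Grad-CAM heatmaps look like soft blobs rather than sharp outlines.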

!pip install pytorch-gradcam

from gradcam.utils import visualize_cam

from gradcam import GradCAM, GradCAMpp

from torchvision.utils import make_grid, save_image

from torchvision import transforms

device = 'cuda' if torch.cuda.is_available() else 'cpu'Collecting pytorch-gradcam

Downloading pytorch-gradcam-0.2.1.tar.gz (6.0 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 6.0/6.0 MB 16.7 MB/s eta 0:00:00

Preparing metadata (setup.py) ... done

Requirement already satisfied: opencv-python in /usr/local/lib/python3.10/dist-packages (from pytorch-gradcam) (4.8.0.76)

Requirement already satisfied: numpy in /usr/local/lib/python3.10/dist-packages (from pytorch-gradcam) (1.23.5)

Building wheels for collected packages: pytorch-gradcam

Building wheel for pytorch-gradcam (setup.py) ... done

Created wheel for pytorch-gradcam: filename=pytorch_gradcam-0.2.1-py3-none-any.whl size=5247 sha256=6376682e2970e5decd7de13c1616d8cc1608ebaf94677874ed723a7aa9cf4b1a

Stored in directory: /root/.cache/pip/wheels/6f/f1/8f/96c81d13f617841f23cae192a77fea3e9e988d058ba9414f2c

Successfully built pytorch-gradcam

Installing collected packages: pytorch-gradcam

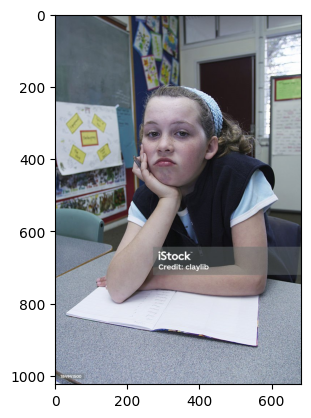

Successfully installed pytorch-gradcam-0.2.1Let’s grab an image for “bored”:

# dls.valid.items are the filenames in the Validation set, and they contain the directory/label names

label, file_num = 'bored', 5

img_path = [s for s in dls.valid.items if label in str(s)][file_num] # grab a filename

print(img_path)

image = load_image(img_path)

plt.imshow(image)

pred = learn.predict(img_path)

print(pred)

print(f'\nSo the label for this image is "{label}", but the model predicts "{pred[0]}".')

scraped_images/bored/bored-student-picture-id184941500_weoYUwRrHn.jpg

('bored', tensor(1), tensor([0.1977, 0.7994, 0.0028]))

So the label for this image is "bored", but the model predicts "bored".

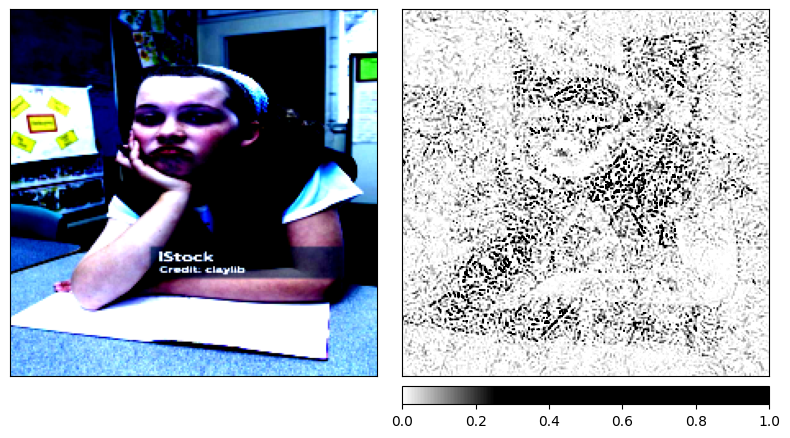
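A note on reading that printed prediction: `learn.predict` returns the decoded label, the index of that label, and one probability per class. The sketch below re-types the printed values as plain Python and assumes the classes are in alphabetical order; check `dls.vocab` in the notebook to confirm the order for your run.

```python
# Values copied from the printed prediction above.
decoded, index, probs = "bored", 1, [0.1977, 0.7994, 0.0028]

# Assumed class order (alphabetical); check dls.vocab in the notebook to confirm.
vocab = ["bad", "bored", "good"]

# The predicted class is simply the position of the largest probability.
best = max(range(len(probs)), key=lambda i: probs[i])
print(best == index, vocab[best] == decoded)

for name, p in zip(vocab, probs):
    print(f"{name:>5}: {p:.1%}")
```

So under that assumed ordering the model is about 80% “bored”, about 20% “bad”, and almost 0% “good” for this image.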

Why did the model reach that conclusion? What part of the image was salient to the model? Let’s generate a GradCAM map and take a look:

dl = learn.dls.test_dl([img_path])

x = first(dl)

torch_img = detuplify(dl.decode_batch(x)[0])

x = detuplify(x)

normed_torch_img = x

target_layer = learn.model[0]

gradcam = GradCAM(learn.model, target_layer)

mask, _ = gradcam(normed_torch_img)

heatmap, overlay = visualize_cam(mask, torch_img)

images = [-torch_img.cpu(), heatmap]

grid_image = make_grid(images)

transforms.ToPILImage()(grid_image)

Aside: Thanks to Benjamin Warner and Tanishq Abraham/@ilovescience on the FastAI Discord for help getting this GradCAM example working!

…So the red area is supposed to be the important part for influencing the prediction.

Let’s try a different salience method called “Integrated gradients”, using a package called Captum:

!pip install -Uq git+https://github.com/pytorch/captum.git

#@title Hiding Integrated Gradient code details here, just press the run button ;-)

from captum.attr import IntegratedGradients, NoiseTunnel

from captum.attr import visualization as viz

from matplotlib.colors import LinearSegmentedColormap

model = learn.model.eval()

pred_label_idx = 2

transformed_img = normed_torch_img

input = normed_torch_img

integrated_gradients = IntegratedGradients(model)

attributions_ig = integrated_gradients.attribute(input, target=pred_label_idx, n_steps=200)

default_cmap = LinearSegmentedColormap.from_list('custom blue',
                                                 [(0, '#ffffff'),
                                                  (0.25, '#000000'),
                                                  (1, '#000000')], N=256)

_ = viz.visualize_image_attr_multiple(np.transpose(attributions_ig.squeeze().cpu().detach().numpy(), (1,2,0)),
                                      np.transpose(transformed_img.squeeze().cpu().detach().numpy(), (1,2,0)),
                                      ["original_image", "heat_map"],
                                      ["all", "positive"],
                                      cmap=default_cmap,
                                      show_colorbar=True)

WARNING:matplotlib.image:Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers).

Ok…that’s not very helpful. Let’s try to smooth it out a bit by adding some noise to the input.
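Since the Captum code is hidden, here is a toy picture of what the two steps do. This is a sketch on a tiny made-up “model” with a hand-written gradient, not Captum’s code: integrated gradients averages the gradient along a straight path from a baseline (all zeros here) to the input, and the “noise” step averages squared attributions over noisy copies of the input, which is my reading of the `smoothgrad_sq` option. All names and numbers below are made up.

```python
import numpy as np

w = np.array([1.0, -2.0, 0.5])            # made-up weights for a toy "model"

def f(x):
    """Toy model output: a weighted sum of squared inputs."""
    return float(np.sum(w * x**2))

def grad_f(x):
    """Gradient of f with respect to the input."""
    return 2 * w * x

def integrated_gradients(x, baseline=None, n_steps=50):
    """Average the gradient along a straight path from baseline to x,
    then scale by (x - baseline)."""
    if baseline is None:
        baseline = np.zeros_like(x)
    alphas = (np.arange(n_steps) + 0.5) / n_steps      # midpoints in (0, 1)
    grads = np.array([grad_f(baseline + a * (x - baseline)) for a in alphas])
    return (x - baseline) * grads.mean(axis=0)

def smoothed_sq(x, n_samples=10, stdev=0.1, seed=0):
    """Average of squared attributions over noisy copies of the input."""
    rng = np.random.default_rng(seed)
    attrs = [integrated_gradients(x + rng.normal(scale=stdev, size=x.shape))
             for _ in range(n_samples)]
    return np.mean(np.square(attrs), axis=0)

x = np.array([1.0, 2.0, 3.0])
attr = integrated_gradients(x)
print("attributions:", attr)
print("sum of attributions:", attr.sum(), " f(x) - f(0):", f(x) - f(np.zeros(3)))
print("smoothed (squared):", smoothed_sq(x))
```

A handy sanity check is that the attributions add up to the difference between the model’s output at the input and at the baseline. For an image, each pixel plays the role of one entry of `x`, which is why the raw attribution map can look so speckled.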


If this next cell produces a “CUDA out of memory” error, then just skip it for now:

#@title Hiding Captum "noise tunnel" code. Just press play...

noise_tunnel = NoiseTunnel(integrated_gradients)

attributions_ig_nt = noise_tunnel.attribute(input, nt_samples=10, nt_type='smoothgrad_sq', target=pred_label_idx)